| 12 |

Disease in long-term survivors |

This chapter will look at late manifestations of genotoxic exposure among the survivors, in particular birth defects, cancer, and cataract. The key observations are as follows:

- The low observed rate of malignant disease in prenatally exposed survivors, while surprising, can be readily reconciled with exposure to either radiation or chemical genotoxic agents.

- The most common birth defect in prenatally exposed survivors is microcephaly, often accompanied by mental retardation. The latter is strongly correlated with a history of acute radiation sickness in mothers, but very poorly with radiation dose estimates.

- Cancer incidence is significantly increased even in those survivors with very low estimated radiation exposure, and also in those who entered the inner city of Hiroshima shortly after the bombing.

- Cataract may be caused by radiation, but also by genotoxic chemicals such as sulfur mustard. Its incidence is greatest near the hypocenter; however, increased rates also occur at distances which should have been beyond the reach of radiation doses sufficient to cause cataract.

While late disease manifestations thus do not qualitatively distinguish radiation from chemical genotoxic agents, their spatial and temporal distribution further strengthens the case against radiation as the causative agent.

In Chapter 11, we already saw that systematic studies on diseases in long-term survivors got underway very belatedly, and also that these studies have suffered, and continue to suffer, from being burdened with fictitious estimated doses of imaginary radiation. As we will see below, many of the more useful studies are those which predate these dose estimates, and which therefore use more tangible points of reference such as symptoms of acute radiation sickness or distance from the hypocenter.

| 12.1 |

Malformations and malignant disease in prenatally exposed survivors |

The numbers of prenatally exposed survivors in Hiroshima and Nagasaki are not large, but they have been the subject of some interesting and surprising findings. It turns out, however, that none of these findings provides substantial evidence for or against the thesis of this book; instead, we will argue here that the observations are compatible with either radiation or mustard gas as the causative agent. This section thus will not advance the main case of the book beyond corroborating yet again that radiation dose estimates are unreliable (see Section 12.1.4). Readers interested only in the evidence relevant to the main thesis may skip ahead to Section 12.2.

Among the effects of genotoxicity considered here, malformations are deterministic, whereas malignant disease (cancer and leukemia) is stochastic (Section 2.11.4); we should therefore expect a steep dose-effect curve for the former and a shallow one for the latter. However, the susceptibility of the embryo and fetus to radiation or other genotoxic agents changes very substantially with time, being highest in the first trimester of pregnancy; thus, if we lump all prenatally exposed survivors together regardless of gestational age at exposure, we can expect the dose-effect curve for malformations to be somewhat broader than that for acute radiation sickness or mortality in adults.
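The broadening effect of pooling different gestational ages can be illustrated numerically. The Python sketch below assumes, purely for illustration, that within each gestational stage the probability of a malformation follows a steep logistic dose-response curve, and that three equally populated stages have hypothetical median effective doses of 1, 2, and 3 Gy; none of these numbers are taken from the studies discussed in this chapter. It compares the dose span between 10% and 90% response for a single stage with that of the pooled curve.

```python
import math

STEEPNESS = 6.0                 # hypothetical slope parameter (per Gy) within one stage
STAGE_D50_GY = [1.0, 2.0, 3.0]  # hypothetical median effective doses for three stages

def logistic_response(dose_gy, d50_gy, steepness=STEEPNESS):
    """Probability of a deterministic effect at a given dose (assumed logistic)."""
    return 1.0 / (1.0 + math.exp(-steepness * (dose_gy - d50_gy)))

def pooled_response(dose_gy):
    """Average response when equal numbers were exposed at each stage."""
    return sum(logistic_response(dose_gy, d50) for d50 in STAGE_D50_GY) / len(STAGE_D50_GY)

def dose_at_response(curve, target, lo=0.0, hi=10.0):
    """Dose at which a monotonically increasing curve reaches `target` (bisection)."""
    for _ in range(60):
        mid = (lo + hi) / 2
        if curve(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def span_10_90(curve):
    """Width of the dose interval between 10% and 90% response."""
    return dose_at_response(curve, 0.9) - dose_at_response(curve, 0.1)

single_stage_span = span_10_90(lambda d: logistic_response(d, 2.0))
pooled_span = span_10_90(pooled_response)
print(f"single stage: {single_stage_span:.2f} Gy, pooled: {pooled_span:.2f} Gy")
```

With these assumed values the pooled curve is roughly three times as wide as a single-stage curve; the exact factor depends entirely on the hypothetical parameters.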

| 12.1.1 |

Experimental studies on teratogenesis induced by radiation and by alkylating agents |

The literature in this field is rather large; we will consider only a few selected studies here. A classical study by Russell and Russell [231] examined the effects of high doses of radiation (1–4 Gy) on the development of mouse embryos, focusing on malformations of the skeletal system. Between the 6th and the 12th day of gestation, malformations were readily induced by doses of 2 Gy and centered on the bones of the trunk and the skull. Irradiation with higher doses also induced malformations in the limbs, and it extended the susceptible period from the 12th to the 14th gestational day.

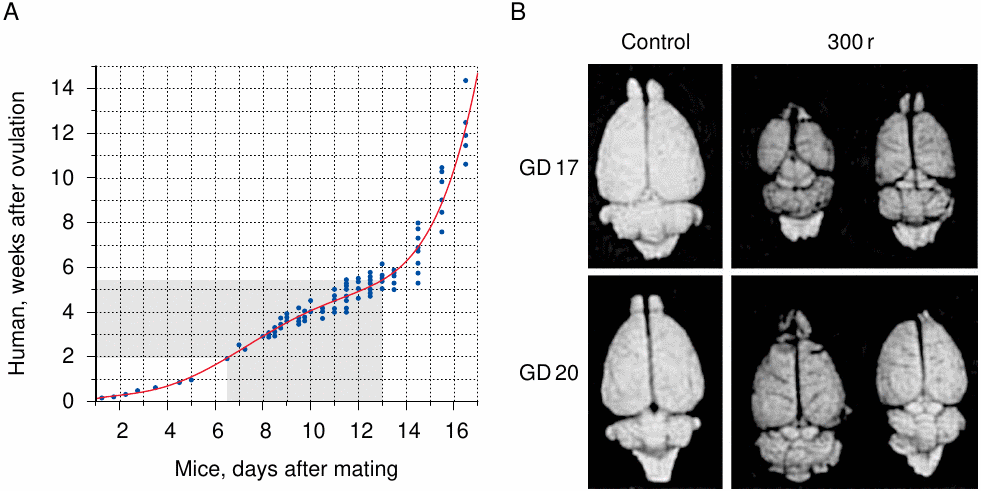

Most experimental teratogenesis studies have been carried out with mice and rats. While these two species have similar developmental schedules, the human embryo develops much more slowly; however, the developmental timetables of human and rodent embryos can nevertheless be correlated by comparing the dates at which specific developmental end points are attained (Figure 12.1A). The slope of that relation is not uniform, since, in contrast to mice and rats, whose entire pregnancy lasts only about three weeks, humans have a lengthy period of fetal growth which follows the few weeks of organ development in the embryonic stage. The organ that develops the latest and the longest is the brain, which remains susceptible to irradiation into the early fetal period. This can also be observed in rats, which show a substantial reduction in brain size after irradiation on gestational day 17, and a lesser one even on day 20, just two days before the end of pregnancy (Figure 12.1B). Comparison with panel A of the figure suggests that human embryos or fetuses should be susceptible to radiation-induced microcephaly at least until the 15th week, and probably beyond. This agrees well with clinical observations on children who were prenatally exposed to high doses of radiation when their mothers underwent treatment, usually for cancer, during pregnancy [234]. Among these cases, microcephaly and mental retardation occurred up to the 20th week.

The radiation doses used by Russell and Russell [231] amount to one quarter to one half of the LD50 in adult mice (see Figure 11.1). Remarkably similar findings were reported by Sanjarmoosavi et al. [235], who used sulfur mustard in rats. These authors reported an LD50 of sulfur mustard of 4.4 mg/kg, and they injected pregnant rats with either 0.75 mg/kg or 1.5 mg/kg between gestational days 11 and 14. The lower dose sufficed to induce various malformations when given on day 11, but not later; the higher dose evoked a similar response up to day 13 but failed to do so on day 14. Thus, with both radiation and sulfur mustard, there is a time-dependent and fairly high threshold dose for teratogenic effects.
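Expressing the sulfur mustard doses as fractions of the reported LD50 makes the comparison with the radiation experiments explicit. A minimal Python sketch using only the numbers given above (the helper name `fraction_of_ld50` is ours, for illustration):

```python
LD50_MG_PER_KG = 4.4           # LD50 of sulfur mustard in rats, as reported in [235]
DOSES_MG_PER_KG = (0.75, 1.5)  # doses injected between gestational days 11 and 14

def fraction_of_ld50(dose_mg_per_kg, ld50_mg_per_kg=LD50_MG_PER_KG):
    """Dose expressed as a fraction of the LD50."""
    return dose_mg_per_kg / ld50_mg_per_kg

for dose in DOSES_MG_PER_KG:
    print(f"{dose} mg/kg = {fraction_of_ld50(dose):.2f} x LD50")
```

The two doses correspond to roughly one sixth and one third of the LD50, a range that overlaps the quarter-to-half range of the radiation doses used by Russell and Russell.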

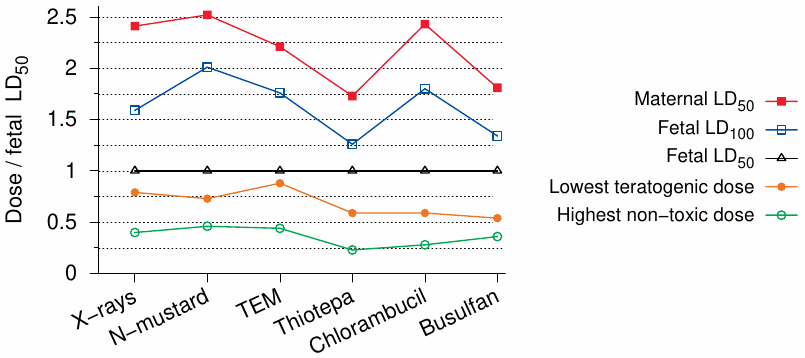

The prenatal effects of radiation and of DNA-alkylating agents were directly compared by Murphy et al. [236]. As Figure 12.2 shows, the ratios of teratogenic to toxic doses were found to be similar between X-rays and nitrogen mustard, which in turn is similar to sulfur mustard in structure and reactivity. In both cases, teratogenic doses are only slightly below the fetal LD50 and a little less than one third of the maternal LD50. Considering that the treatment in question was applied on gestational day 12, and that the teratogenic efficacy diminishes as pregnancy progresses, the minimal teratogenic dose might actually surpass the fetal LD50 in later stages.

In view of the experimental and clinical evidence discussed so far, we might expect the following observations in prenatally exposed victims and survivors in Hiroshima and Nagasaki:

- malformations or stunted organ development should be observed mostly in those exposed between the 6th and the 20th week of pregnancy;

- the most commonly affected organ should be the brain;

- severe malformations in the children should correlate with maternal toxicity (acute radiation sickness);

- the incidence of outright fetal death may reach or exceed that of severe malformations.

In the next section, we will see that the pregnancy outcomes observed among the bombing victims correspond closely to these empirically based expectations.

| 12.1.2 |

Correlation of mental retardation with maternal ARS and with fetal and infant mortality |

The most frequently observed somatic aberration was indeed microcephaly, commonly defined as a head circumference that is two or more standard deviations below the average. When evaluating microcephalic survivors for mental retardation, early studies applied very stringent criteria [237]:

Mental retardation was diagnosed only if the subject was unable to perform simple calculations, to carry on a simple conversation, to care for himself, or if he was completely unmanageable, or had been institutionalized.

It seems likely that some of the microcephalic children whose condition was less severe than this nevertheless had some degree of mental impairment.
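The conventional microcephaly criterion mentioned above (a head circumference two or more standard deviations below the average) amounts to a simple z-score cutoff. In the Python sketch below, the reference mean and standard deviation are hypothetical placeholders; in practice they would come from age- and sex-specific reference tables.

```python
def head_circumference_z(circumference_cm, ref_mean_cm, ref_sd_cm):
    """Standard-deviation score of a head circumference relative to a reference population."""
    return (circumference_cm - ref_mean_cm) / ref_sd_cm

def is_microcephalic(circumference_cm, ref_mean_cm, ref_sd_cm, cutoff_sd=2.0):
    """True if the head circumference is at least `cutoff_sd` SDs below the reference mean."""
    return head_circumference_z(circumference_cm, ref_mean_cm, ref_sd_cm) <= -cutoff_sd

# Hypothetical reference values for one age and sex group.
REF_MEAN_CM, REF_SD_CM = 50.0, 1.5
print(is_microcephalic(46.5, REF_MEAN_CM, REF_SD_CM))  # z is about -2.33
print(is_microcephalic(48.0, REF_MEAN_CM, REF_SD_CM))  # z is about -1.33
```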

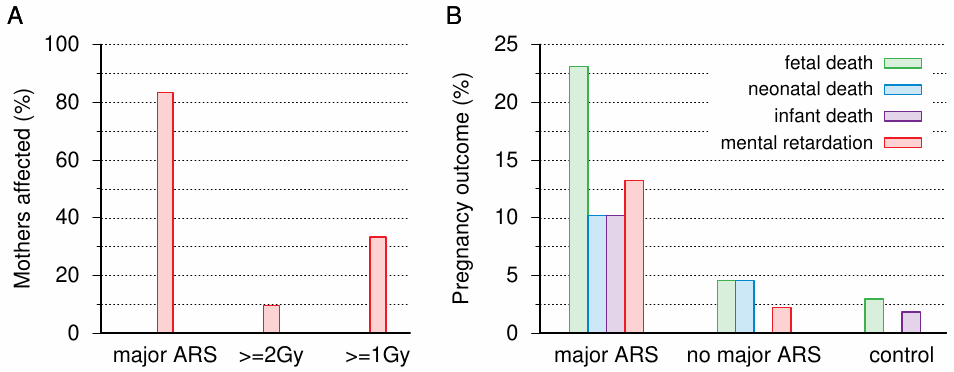

The first published reports on microcephaly with mental retardation are those by Yamazaki et al. [240], who described cases from Nagasaki, and by Plummer [241] and Miller [239], who reported on cases from Hiroshima. Across these three studies, there are 18 children with microcephaly and mental retardation who have no other reported likely cause of retardation (e.g. Down syndrome), and for whose mothers it is known whether or not they suffered ARS in the aftermath of the bombings (information on maternal ARS is lacking in one additional case). As it turns out, 15 of the 18 mothers had indeed suffered ‘major’ ARS symptoms, that is, one or more of epilation, purpura, and (in the case of Yamazaki et al.) oropharyngeal lesions. Miller also lists several abnormalities other than microcephaly, but aside from Down syndrome, of which there are two cases, each of these occurs only once.
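To put the 15-of-18 figure in perspective, one can attach a confidence interval to the proportion. The Python sketch below uses the Wilson score interval, a standard choice for small samples. This is our own illustration, not a calculation reported in the original studies, and it says nothing by itself about how often ARS occurred among mothers of unaffected children.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 for ~95%)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width

low, high = wilson_interval(15, 18)
print(f"15/18 = {15/18:.0%}, 95% CI {low:.0%} to {high:.0%}")
```

Even the lower bound of this interval, about 61%, corresponds to a clear majority of the mothers.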

The only authors to explicitly correlate adverse pregnancy outcomes other than mental retardation with maternal ARS are Yamazaki et al. [240]. Even though their case numbers are small (their entire sample of mothers with major ARS graphed in Figure 12.3B comprised only 30 subjects), the findings are clear enough: like mental retardation, fetal, neonatal, and infant death (the latter defined as death within the first year) are strongly correlated with maternal ARS. Oughterson et al. [33] give abortion rates for their samples of close to 7,000 survivors from each city. Within 1,500 m of the hypocenter, the proportion of pregnancies ending in abortion approaches 40% in Hiroshima; in Nagasaki, this value is exceeded even if all those within 3,000 m are included.149 The total number of abortions in Oughterson’s entire sample is 45, which exceeds the number of mentally retarded children found in later studies on survivors.

In summary, fetal or infant death and mental retardation in surviving children are all strongly associated with acute radiation sickness in the mothers and, therefore, with exposure to a high level of radiation or chemical genotoxicity.

| 12.1.3 |

Mental retardation and time of exposure |

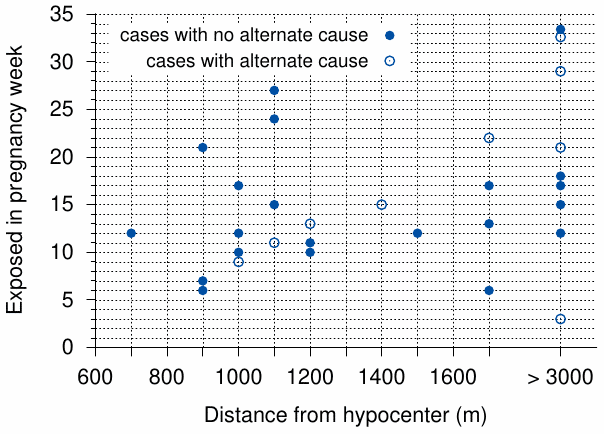

A later study by Wood et al. [242] reports 30 prenatally exposed victims with mental retardation. Nine of these 30 cases are ambiguous, since the children have additional conditions (chromosome aberrations, or histories of brain infections or perinatal complications) that might well account for the observed mental deficit. The remaining 21 unambiguous cases slightly outnumber the 18 such cases reported in earlier studies (see above). Figure 12.4 shows the putative week of gestation at the time of exposure for each of Wood’s 30 cases, as well as the mother’s distance from the hypocenter.150 With the exception of one earlier, ambiguous case, mental retardation occurs only with exposure from the 6th week of gestation onward. The average gestational age of all cases is 14 weeks when the ambiguous cases are omitted, and 15 weeks when they are included. Some cases arise after the 20th week; the single very late case, exposed beyond 3,000 m from the hypocenter, was likely not caused by the bombing.

We saw earlier that symptoms of ARS were observed in some late entrants to the city center in Hiroshima (Section 8.7); major ARS symptoms were apparent in some individuals who first entered the inner city up to two weeks after the bombing. Moreover, we noted that such delayed exposure could account for cases of ARS that became manifest unusually late (see Section 8.8). If postponed exposure could also induce mental retardation in unborn children, we might expect the apparent gestational age of these children (that is, their gestational age at the time of the bombing rather than at the actual exposure) to be reduced in keeping with the delay of exposure. However, no such trend is apparent in Figure 12.4 among those whose mothers had been more than 3,000 m from the hypocenter during the bombing, and who would be the most likely to have been exposed only afterwards. Instead, four of the five unambiguous cases in this group cluster around the 15th week of pregnancy, suggesting that they, too, were caused by exposure during the bombing or only a short time thereafter.

Overall, we can conclude that the timing of mental retardation induced by prenatal exposure agrees well with expectations based on experimental studies and on prior observations on the children of mothers who had received radiation treatment during pregnancy.

| 12.1.4 |

Mental retardation and radiation dose estimates |

Considering that both experimental studies and observations on the bombing victims clearly indicate that mental retardation results only from high levels of exposure, it is of considerable interest to compare this clinical outcome with estimated radiation doses. If the dose estimates were realistic, most mothers of retarded children should have high dose estimates; this, however, is not observed. According to Otake and Schull [238], only about 10% of the mothers have estimated doses of ≥ 2 Gy, and only about 32% reach or exceed 1 Gy (Figure 12.3).151 Another oddity of Otake and Schull’s study is the discrepancy between the two cities: 27% of those exposed to 0.5–1 Gy in Hiroshima, but 0% of those so exposed in Nagasaki, were mentally retarded. (The numbers are close to 37% for expectant mothers exposed to > 1 Gy in both cities.)152

Blot [243] as well as Miller and Mulvihill [244] report that microcephaly, with or without accompanying mental retardation, is significantly increased already at estimated doses below 0.2 Gy, and very strongly so at levels between 0.2 and 0.3 Gy. Considering the evidence from animal experiments, in which teratogenic doses amounted to a substantial fraction of the maternal LD50, this simply is not plausible.153 Overall, the poor correlation between dose estimates and clinical outcomes that we noted with ARS in Section 11.3 also applies to microcephaly with mental retardation in prenatally exposed children.

| 12.1.5 |

Cancer and leukemia in prenatally exposed survivors |

A major discovery in radiation biology and medicine, and one that was initially greeted with much skepticism, was that prenatal exposure to even the small doses of radiation used in X-ray diagnostics causes a measurable increase in the incidence of childhood cancer and leukemia. First reported in 1956 by Stewart et al. [246],154 this finding was later confirmed in two independent large-scale studies in the UK [247] and the U.S. [248]. While the exact magnitude of the risk remains under debate, it is generally believed to be at least as high as that of exposure in the first decade after birth, which is the most sensitive period of extra-uterine life [249].

Against this background, it is certainly surprising to learn that only one case of cancer, and no cases of leukemia, occurred during the first ten years among the prenatally exposed in Hiroshima and Nagasaki [250,251], even though a considerable number of leukemias did occur among those who had been exposed as young children. Using the then-current estimate of the cancer risk per dose of radiation [247] and the survivors’ estimated radiation doses, Jablon and Kato [250] calculated that approximately 37 of those prenatally exposed should have been afflicted by cancer or leukemia, and they suggested that the cancer risk of prenatal radiation exposure must be far lower than assumed.
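Taken at face value, the size of the nominal discrepancy can be expressed as a Poisson probability: if about 37 cases were expected, how likely is it to observe one or none? The Python sketch below assumes that case counts follow a Poisson distribution whose mean is Jablon and Kato’s expected value. Since that expected value itself rests on the unreliable dose estimates, the result describes only the discrepancy as stated, not its cause.

```python
import math

def poisson_cdf(k, mu):
    """P(X <= k) for X ~ Poisson(mu), summing terms iteratively."""
    term = math.exp(-mu)  # P(X = 0)
    total = term
    for i in range(1, k + 1):
        term *= mu / i    # P(X = i) obtained from P(X = i - 1)
        total += term
    return total

expected_cases = 37.0  # Jablon and Kato's approximate expectation
observed_cases = 1     # one cancer, no leukemia
print(f"P(X <= {observed_cases}) = {poisson_cdf(observed_cases, expected_cases):.1e}")
```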

A lot of ink has since been spilled over the question of whether the discrepancy between observed and expected incidences is statistically robust. Since Jablon and Kato’s expected cancer rate is based on the very same estimated radiation doses that were already shown to be unreliable (see above and Chapter 11), there is no point in joining that argument. Rather than explaining away Jablon and Kato’s findings with statistical contortions, as some have tried, we will instead consider whether they can be properly understood in a scientific context.

We might start from the assumption that the toxic principle was not radiation, but rather a chemical poison. Drugs and poisons which are present in the maternal circulation differ considerably in their ability to traverse the placenta and reach the unborn child. This is well illustrated in an experimental study by van Calsteren et al. [252]: among six different anticancer drugs examined, the fetal plasma levels ranged from 0% to 57% of the maternal ones. Thus, in principle, the embryo and fetus may be protected from a drug or poison that harms the mother, while no such protection is possible with γ- or neutron radiation. However, this line of reasoning fails with the poison used in Hiroshima and Nagasaki, since the observed teratogenic effect (see Section 12.1.2) indicates efficient traversal of the placenta. Evidently, the poison affected the unborn children to a similar extent as radiation would have, yet it induced only a very small number of malignancies.155 Thus, we clearly must reexamine the assumption of high prenatal susceptibility to cancer induction by radiation or other mutagenic stimuli.

Anderson et al. [254] reviewed a number of experimental studies that compare the effects of X- or γ-rays and of various chemical carcinogens before and after birth. The chemicals were not similar to sulfur mustard, and they might undergo metabolic activation or inactivation before and after birth to different degrees; therefore, we will here only consider the radiation studies from that review. Among these, the majority find greater carcinogenic potential after birth than before, but exceptions are observed. In a particularly comprehensive study by Sasaki [255], mice were irradiated at various times before or after birth, then allowed to live out their lives until their natural death, and finally autopsied. Interestingly, the most sensitive time for cancer induction was tissue-dependent; among 9 different types of tumors, 7 were induced by radiation more readily after birth than before it, whereas the reverse was true for the other two.

Cancers and leukemias are very often accompanied (and sometimes caused) by chromosome aberrations. We saw in Section 11.4.1 that somatic chromosome aberrations can persist for a very long time. Interestingly, however, they may be eliminated rather quickly after fetal exposure to alkylating agents [256] or to radiation [257]; this apparently applies to lymphocytes but not to epithelial cells [258]. Low rates of chromosome aberrations were also observed in the lymphocytes of prenatally exposed bombing survivors, even if their mothers had high rates of persistent aberrations [259]. Lymphatic leukemia (that is, leukemia originating from precursor cells of lymphocytes) is the single most common childhood malignancy in general, and it was also the most common one among children postnatally exposed in Hiroshima and Nagasaki. The mechanism by which the fetus eliminates chromosome anomalies from lymphocytes, and presumably also from their precursor cells, remains to be elucidated; but the effect as such is clear enough, and it may well account for Jablon and Kato’s remarkable observation that childhood leukemias were absent from prenatally exposed bombing survivors.

Surprising as this evidence may be, it does not distinguish radiation from radiomimetic compounds such as sulfur mustard as the genotoxic agent used in Hiroshima and Nagasaki. It also does not rule out the induction of childhood cancers—in small numbers, and thus detectable only in samples much larger than those of the bombing survivors—by medical X-ray exposure. In this context, the collective evidence simply indicates that we should not linearly extrapolate from low doses to very high ones or vice versa.

| 12.2 |

Cancer and leukemia |

The literature on the incidence of cancers and leukemias among the bombing survivors in Hiroshima and Nagasaki is quite large. Many of the reported findings fit equally well with either radiation or radiomimetic chemicals as the underlying cause. We will not attempt to review the entire field here; instead, we will focus on a small number of studies that do provide some clues as to the true cause of these cases.

| 12.2.1 |

Correlation of death due to cancer and leukemia with acute radiation sickness and burns |

While many early studies correlated cancer incidence with distance from the hypocenter, virtually all recent ones use radiation dose estimates as the explanatory variable. As we have seen, however, the radiation dose estimates are only loosely correlated with biological outcomes such as acute radiation sickness and somatic chromosome aberrations (Sections 11.3–11.5). Therefore, we might ask whether those biological outcomes themselves are more suitable predictors of cancer risk than the radiation dose estimates.

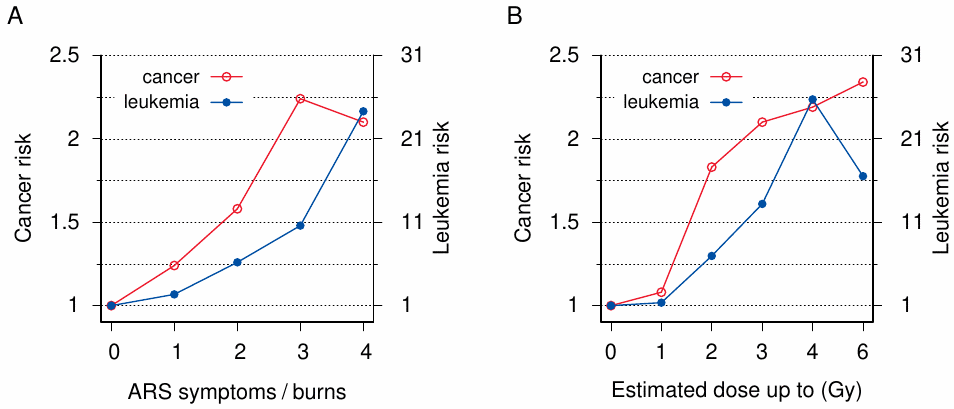

Chromosome aberrations have apparently been studied in only a fairly limited number of survivors, and moreover there seems to be no data set that would allow one to correlate them with cancer incidence. However, the fairly large data set which we used in Section 11.3 to correlate radiation doses with acute radiation sickness [168] also contains cancer and leukemia mortality data. We can therefore examine to what degree ARS symptoms predict cancer risk.

The result is depicted in Figure 12.5A. The risk of both cancer and leukemia clearly increases with the number of ARS symptoms. Interestingly, burns, when present, also increase the cancer risk, even though their specific contribution to the total risk is somewhat lower than that of each single ARS symptom. Figure 12.5B shows the correlation of cancer and leukemia risk with radiation dose estimates, as determined from the same data set. Considering our earlier observation that these estimates are not very good at predicting ARS symptoms, the high degree of correlation evident in this figure may be surprising. We will examine this question in the next section; for now, we will focus on ARS symptoms and burns.

Given that ARS symptoms are caused by the genotoxic effects of radiation or of radiomimetic chemicals, their correlation with the risk of cancer and leukemia is expected. In contrast, the association of cancer risk with burns is surprising. A trivial explanation of this correlation might be that burns are simply a secondary indicator of exposure to radiation or to poison. Burns are indeed highly correlated with ARS and with radiation dose estimates (not shown). However, even if we consider only those survivors who have no ARS symptoms and/or have dose estimates of less than 5 mGy, some risk associated specifically with burns remains (Table 12.1). Among survivors without ARS, the cancer mortality observed with burns but minimal radiation dose is exceeded by those without burns only at estimated doses of 1 Gy and beyond. Thus, burns as the only documented indicator of exposure appear to carry an increased cancer risk. Note, however, that survivors with burns are older by about three years on average than those without; this age difference may contribute to their increased risk of cancer.156

| Sample | Burns | Subjects | Person-years | Mean age (years) | Cancer deaths | Incidence (per 1,000 person-years) | Relative risk |
|---|---|---|---|---|---|---|---|
| no ARS | − | 63,072 | 1,850,801 | 27.8 | 4,729 | 2.56 | |
| | + | 4,059 | 117,960 | 29.2 | 385 | 3.26 | 1.28 |
| no radiation | − | 31,580 | 927,705 | 27.9 | 2,285 | 2.46 | |
| | + | 908 | 25,783 | 31.0 | 90 | 3.49 | 1.42 |
| neither | − | 31,138 | 914,522 | 27.8 | 2,253 | 2.46 | |
| | + | 835 | 23,660 | 30.8 | 84 | 3.55 | 1.44 |
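The incidence and risk columns of Table 12.1 can be recomputed from the raw counts: incidence is cancer deaths per 1,000 person-years, and the risk column is the ratio of the incidence with burns to that without burns within the same sample. A short Python check (the dictionary layout and helper names are ours):

```python
# (cancer deaths, person-years) for survivors without (-) and with (+) burns
TABLE_12_1 = {
    "no ARS":       {"-": (4_729, 1_850_801), "+": (385, 117_960)},
    "no radiation": {"-": (2_285, 927_705),   "+": (90, 25_783)},
    "neither":      {"-": (2_253, 914_522),   "+": (84, 23_660)},
}

def incidence_per_1000(cases, person_years):
    """Crude incidence per 1,000 person-years."""
    return 1000 * cases / person_years

def burn_relative_risk(sample):
    """Incidence with burns divided by incidence without burns."""
    rows = TABLE_12_1[sample]
    return incidence_per_1000(*rows["+"]) / incidence_per_1000(*rows["-"])

for sample, rows in TABLE_12_1.items():
    without = incidence_per_1000(*rows["-"])
    with_burns = incidence_per_1000(*rows["+"])
    print(f"{sample}: {without:.2f} vs {with_burns:.2f}, risk {burn_relative_risk(sample):.2f}")
```

These are crude ratios; they are not adjusted for the age difference between survivors with and without burns noted above.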

While thermal burns might occasionally cause skin cancer in the long term, the great majority of cancers in this statistic concern internal organs; thus, the commonly imputed trauma mechanism (‘flash burn’) does not explain the documented cancer risk, which therefore provides yet another piece of evidence against the official story of the nuclear detonation. More interesting than this conclusion, though, are the implications for the alternate scenario developed in this book.

We noted in Section 9.4 that studies on napalm injury are extremely scarce in the medical literature, and I am not aware of any statistics on cancer incidence in napalm victims. However, as with other thermal burns, it is not biologically plausible that napalm burns should increase the cancer risk of internal organs. In contrast, an elevated general risk of cancer would be expected after exposure to genotoxic agents such as sulfur mustard. Thus, the cancer risk associated with burns strengthens our previous conclusion that a substantial fraction of the reported burns were indeed chemical burns due to mustard gas (see Section 9.5).

| 12.2.2 |

Cancer rates at low radiation doses |

We saw before that estimated radiation doses don’t predict ARS symptoms particularly well (Figure 11.1), but also that the cancer risk does correlate with radiation doses (Figure 12.5B). Can we reconcile these two observations?

As noted in Section 2.11.4, ARS is a deterministic radiation effect, whereas cancer is a stochastic one. Thus, with cancer, all we can ask is whether the incidence in large samples increases with the radiation dose, which is indeed the case. With acute radiation sickness, on the other hand, such a correlation of averages is not enough; instead, the presence or absence of ARS in small samples, or even in each individual survivor, should exhibit a plausible relationship to the estimated dose. There should be at most a very small number of outliers, which might arise for example from clerical errors in dose assignment or clinical history-taking. As we saw before, this is clearly not observed.

If we consider an arbitrary dose interval—say, from 2 to 3 Gy—we can assert that, in the calculation of the cancer incidence in this dose range, spillover from the adjacent dose ranges on both sides will at least partially cancel out: subjects who were assigned to this interval but really received a dose above 3 Gy will contribute some surplus cancer cases, which will be balanced by lower cancer case numbers among those subjects included in the interval that really received below 2 Gy. However, such mutual compensation will not occur at the edges of the entire dose range. While the upper edge is but sparsely populated, the sample size near the lower edge is very large. Thus, the cancer incidence at the low end of the dose range should tell us something about the accuracy of dose assignment. If estimated doses were accurate, then the cancer incidence among survivors with very low estimates should be essentially the same as in unexposed control subjects; on the other hand, if a significant number of survivors with low estimates really received higher doses, then there should be surplus cancer incidence within this group.
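The edge argument can be made concrete with a toy calculation. The Python sketch below assumes, purely hypothetically, that cancer risk rises linearly with the true dose. It compares the expected incidence in the lowest estimated-dose bin when doses are assigned correctly with the case in which a small group of truly exposed subjects is wrongly recorded as unexposed. All counts and risk coefficients are invented for illustration.

```python
def bin_incidence(groups, lo_gy, hi_gy, baseline_risk, excess_risk_per_gy):
    """Expected cancer incidence among subjects whose *estimated* dose is in [lo_gy, hi_gy).

    groups: (estimated_dose_gy, true_dose_gy, n_subjects) tuples.
    Risk is assumed to depend linearly on the *true* dose.
    """
    subjects = 0
    expected_cases = 0.0
    for estimated, true, n in groups:
        if lo_gy <= estimated < hi_gy:
            subjects += n
            expected_cases += n * (baseline_risk + excess_risk_per_gy * true)
    return expected_cases / subjects

BASELINE, EXCESS_PER_GY = 0.10, 0.05  # hypothetical lifetime risk and excess risk per Gy

accurate = [(0.0, 0.0, 30_000), (2.5, 2.5, 1_000)]
# Hypothetical misassignment: 600 subjects who truly received 2 Gy are recorded at 0 Gy.
misassigned = [(0.0, 0.0, 30_000), (0.0, 2.0, 600), (2.5, 2.5, 1_000)]

for label, groups in (("accurate", accurate), ("misassigned", misassigned)):
    low_bin = bin_incidence(groups, 0.0, 0.005, BASELINE, EXCESS_PER_GY)
    print(f"{label}: lowest-bin incidence {low_bin:.4f} (baseline {BASELINE})")
```

Because nothing can spill into this bin from below, the misassigned subjects raise its incidence above the baseline, here by about 2%. Only a comparison with a truly unexposed control group can reveal such an excess.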

Readers will be familiar with the general idea of control groups, and they will appreciate that control groups should, as far as possible, be unexposed to the agent or stimulus under study. They may therefore be surprised to learn that ABCC/RERF’s long-term studies have used, and continue to use, control groups from within the two cities, consisting of survivors who were deemed to have been outside the reach of bomb radiation. The practice has been criticized vigorously and repeatedly [159,260,261], but RERF has not paid heed to these well-founded objections.

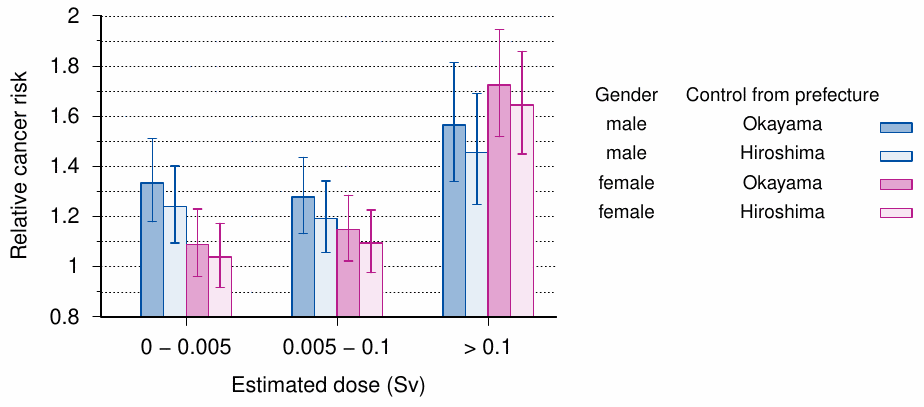

When one compares the exposed with the exposed, there is of course no chance (and no risk) of discovering anything amiss. There exists, however, one study that has compared the cancer risks of Hiroshima survivors with low estimated doses to a proper control group from outside the city. In this study [262], the cancer incidence in subjects from Hiroshima was compared with that in two different control groups, namely, the entire population of Hiroshima prefecture and that of the adjoining Okayama prefecture. The former contains the city of Hiroshima, which accounts for a sizable minority of its entire population; however, within the population of Okayama prefecture, the number of bombing survivors should be negligible.

The results of the study are summarized in Figure 12.6. In the comparison of Hiroshima survivors with both control groups, the data were adjusted for differences in gender and age, both of which strongly affect cancer incidence. The survivors were divided into three dose groups; the lowest dose group, with estimated doses of 0–5 mSv, is the very same one which RERF routinely misuses as ‘negative controls’. The cancer risk relative to the two control populations is clearly and significantly increased in men, and slightly but not significantly in women.
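One common way to adjust such comparisons for age and sex is indirect standardization: the observed number of cases is divided by the number expected if the reference population’s stratum-specific rates applied to the survivors’ person-years. The text does not say which adjustment method Watanabe et al. used, so the Python sketch below is only a generic illustration with invented strata, rates, and counts.

```python
def expected_cases(strata):
    """strata: (person_years, reference_rate_per_person_year) pairs, one per age-sex stratum."""
    return sum(person_years * rate for person_years, rate in strata)

def standardized_incidence_ratio(observed, strata):
    """Observed cases divided by cases expected from the reference population's rates."""
    return observed / expected_cases(strata)

# Hypothetical age-sex strata for one dose group; rates taken from a control population.
strata = [
    (10_000, 0.004),  # men, younger
    (5_000, 0.012),   # men, older
    (12_000, 0.003),  # women, younger
    (6_000, 0.008),   # women, older
]
print(f"expected {expected_cases(strata):.0f}, SIR {standardized_incidence_ratio(230, strata):.2f}")
```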

The upper bound (0.1 Sv) of the second dose range is still fairly low; it is thus not too surprising that the cancer risk changes little relative to the lowest dose group, but in women the risk trends slightly higher. A very large and unambiguous increase in cancer risk, which is now greater in women than in men, is seen in the highest dose group. In each case, the risk is higher relative to Okayama prefecture than to Hiroshima prefecture. The most straightforward explanation for this difference is that the Hiroshima prefecture control group only ‘dilutes’ the bombing survivors, but does not entirely exclude them. The population of Okayama prefecture can be considered unaffected by this problem, and it therefore constitutes the more appropriate control group.
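The ‘dilution’ explanation can be expressed as a simple formula. Suppose, as a simplifying assumption, that survivors make up a fraction f of the control population and share the survivors’ true relative risk r, while everyone else has the baseline rate. The control rate is then (1 − f) + f·r times the baseline, and the apparent relative risk is r / ((1 − f) + f·r), which is always below r when r > 1 and f > 0. A Python sketch with hypothetical numbers:

```python
def apparent_relative_risk(true_rr, survivor_fraction):
    """Relative risk observed against a control group that itself contains survivors.

    Assumes survivors in the control group share `true_rr` and all others have
    the baseline rate (normalized to 1).
    """
    control_rate = (1 - survivor_fraction) + survivor_fraction * true_rr
    return true_rr / control_rate

TRUE_RR = 1.5  # hypothetical
for fraction in (0.0, 0.1, 0.2):  # hypothetical survivor fractions in the control group
    print(f"f = {fraction:.1f}: apparent RR = {apparent_relative_risk(TRUE_RR, fraction):.3f}")
```

An uncontaminated control group such as Okayama prefecture corresponds to f close to zero, where the apparent and true relative risks coincide.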

The difference in cancer risk between men and women, particularly in the lowest dose group, is interesting. Watanabe et al. [262] comment as follows:

Confounding factors, such as smoking and drinking alcohol, may also affect the distribution, but there were also more males than females involved in the rescue efforts subsequent to the bombing, and these males may therefore have been active in areas with residual radiation.

Could the elevated cancer risk in the survivors indeed be due to heavier drinking or smoking among survivors than among control subjects? Smoking promotes cancer of the lungs more strongly than that of any other organ; however, among the male survivors in every dose category, the relative excess risk of lung cancer was below the average of all cancers (but it was above the average excess cancer risk among women in the middle and high dose groups). Similarly, alcohol should have preferentially increased the relative excess risk of gastric cancer, but this number, too, was below the total relative excess cancer risk in both genders and within all dose groups. Thus, at least in men, whose overall excess cancer risk in the low and middle dose categories is most in need of an explanation, there is no indication at all that smoking or drinking is the cause.

This leaves us with the second proposed interpretation, namely, that men predominantly participated in rescue and recovery after the bombing, during which they were exposed to residual radiation. Watanabe et al. adopt this view:

It cannot be denied that even survivors in the very low [dose] category may have been subject to additional radioactive fallout and may have breathed in or swallowed induced radioactive substances in the vicinity of the hypocenter.

The assumption that excess morbidity in men was caused by prolonged exposure near the hypocenter agrees with anecdotal evidence: multiple child survivors quoted by [14] relate that their fathers stayed behind in Hiroshima, sometimes falling ill from ARS, while mothers and children found refuge outside the city. If delayed exposure were indeed a major factor, the risk in male survivors should be age-dependent, since boys younger than 12 years or so were likely not called up to join in the rescue effort, and they should thus have a lower cancer risk than those who were 16 years or older. Watanabe’s study does not, however, break down the cancer risk by age.157

Reacting to Watanabe’s findings, scientists from RERF issued a papal bull entitled “Radiation unlikely to be responsible for high cancer rates among distal Hiroshima A-bomb survivors” [230] that dismisses them as ‘implausible’, insisting (1) that the risk should really have been higher in women than in men, and (2) that Watanabe’s observed risk was altogether too large. Their first assertion was based on RERF’s own studies, which, as we have already discussed, relied on phony dose estimates and improper control groups. The second claim was supported by the conventional wisdom that bomb radiation was short-lived and fallout was small; thus, there was no possible source of radiation, and Watanabe’s findings must therefore be spurious. What better explanation did RERF have to offer? You guessed it: smoking.

While we agree with RERF that there is indeed no plausible source of residual radiation which could account for the substantially increased cancer risk among the male survivors in the low-dose group, we certainly don’t accept their conclusion that Watanabe’s findings must therefore be spurious. Instead, we will next examine Watanabe’s suggestion of an increased cancer risk among those who joined the cleanup effort in the inner city.

| 12.2.3 |

Cancer and leukemia in early entrants to Hiroshima |

If staying behind in the city after the bombing increased the risk of cancer, then some increased risk should also be observed in those who entered the city only after the bombing. This is indeed the case. A review by Watanabe [155] documents a strikingly increased risk of leukemia in those who entered the city within the first three days after the bombing, relative to those who entered the city later (Table 12.2). Entry between days 4 and 7 still seems to carry a slightly elevated risk when compared to later entry, but this difference is not statistically significant.

| Time of entry (days) | ≤ 3 | 4–7 | 8–14 |
|---|---|---|---|
| Population | 25,799 | 11,001 | 7,326 |
| Number of cases | 62 | 9 | 4 |
| Incidence per 10⁵ per year | 8.90 | 3.03 | 2.02 |
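The contrasts in Table 12.2 can be checked with a standard approximation. The sketch below is our own illustration, not part of Watanabe's analysis: it assumes Poisson-distributed case counts and takes the tabulated (rounded) rates at face value, so that the standard error of the log rate ratio between two entry groups is about √(1/a + 1/b), where a and b are the case counts.

```python
import math

# Case counts and leukemia incidence per 10^5 per year, copied from Table 12.2.
TABLE_12_2 = {
    "<=3": {"cases": 62, "rate": 8.90},
    "4-7": {"cases": 9, "rate": 3.03},
    "8-14": {"cases": 4, "rate": 2.02},
}

def rate_ratio_ci(group, reference, z=1.96):
    """Rate ratio of `group` vs `reference` with an approximate 95% Wald CI.

    Assumes Poisson case counts, so SE(log RR) is about sqrt(1/a + 1/b),
    with a and b the case counts of the two groups.
    """
    a = TABLE_12_2[group]
    b = TABLE_12_2[reference]
    rr = a["rate"] / b["rate"]
    se = math.sqrt(1 / a["cases"] + 1 / b["cases"])
    return rr, rr * math.exp(-z * se), rr * math.exp(z * se)

for group, ref in [("<=3", "4-7"), ("<=3", "8-14"), ("4-7", "8-14")]:
    rr, lo, hi = rate_ratio_ci(group, ref)
    print(f"{group} vs {ref} days: RR = {rr:.2f} (95% CI {lo:.2f}-{hi:.2f})")
```

Under these assumptions, entry within 3 days gives a rate ratio of about 2.9 against days 4–7 and about 4.4 against days 8–14, both with lower 95% bounds above 1, whereas days 4–7 against days 8–14 gives about 1.5 with an interval that includes 1, in line with the statement in the text.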

The same author also reported an increased incidence of thyroid cancer among those who entered Hiroshima within 7 days of the bombing, and who were diagnosed between 1951 and 1968 in the surgical department of Hiroshima University Hospital [155, p. 519]. The incidence of thyroid cancer in this group was similar to that among the directly exposed. However, the overall number of cases in this sample of early entrants was small (9), which limits the statistical power of this study.

Watanabe summarizes one additional Japanese study on thyroid cancer with similar findings. Furthermore, he reports that bronchial carcinoma, too, was notably increased among early entrants, but as with thyroid carcinoma the overall number of observed cases was low. Watanabe also surveys cancers of several other organs, but here he does not consider early entrants separately from the directly exposed.
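How little a handful of cases can establish is easy to quantify. As an illustration of our own (not taken from Watanabe), the sketch below computes the exact (Garwood) two-sided 95% confidence interval for the expected value of a Poisson count, here the 9 thyroid cancers among early entrants, using only the standard library: it solves P(X ≥ k) = 2.5% for the lower limit and P(X ≤ k) = 2.5% for the upper limit by bisection.

```python
import math

def poisson_cdf(k, mu):
    """P(X <= k) for X ~ Poisson(mu), summed term by term."""
    term = math.exp(-mu)
    total = term
    for i in range(1, k + 1):
        term *= mu / i
        total += term
    return total

def bisect_root(g, lo, hi, iterations=100):
    """Root of g on [lo, hi] by bisection; g(lo) and g(hi) must differ in sign."""
    g_lo = g(lo)
    for _ in range(iterations):
        mid = (lo + hi) / 2
        g_mid = g(mid)
        if (g_mid < 0) == (g_lo < 0):
            lo, g_lo = mid, g_mid
        else:
            hi = mid
    return (lo + hi) / 2

def exact_poisson_ci(k, alpha=0.05):
    """Exact (Garwood) two-sided CI for the mean of an observed Poisson count k."""
    search_max = k + 10 * math.sqrt(k) + 10  # comfortably above the upper limit
    if k == 0:
        lower = 0.0
    else:
        # Lower limit: P(X >= k | mu) = alpha / 2
        lower = bisect_root(lambda mu: 1 - poisson_cdf(k - 1, mu) - alpha / 2, 0.0, search_max)
    # Upper limit: P(X <= k | mu) = alpha / 2
    upper = bisect_root(lambda mu: poisson_cdf(k, mu) - alpha / 2, 0.0, search_max)
    return lower, upper

lower, upper = exact_poisson_ci(9)
print(f"9 observed cases: 95% CI for the expected count {lower:.2f}-{upper:.2f}")
```

For 9 observed cases the interval runs from roughly 4.1 to 17.1, a more than fourfold range, which is why such small samples can support only tentative conclusions.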

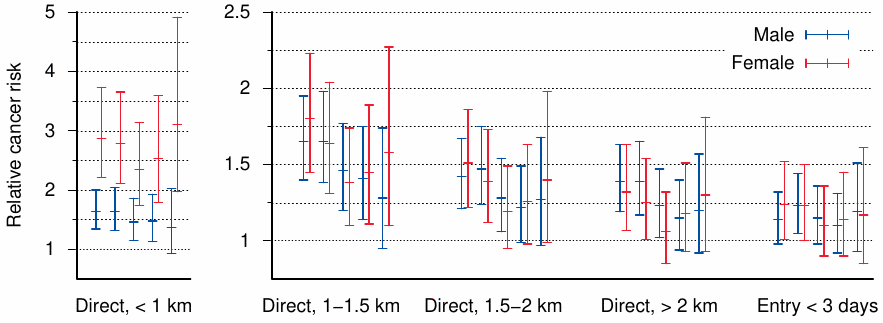

A more recent study on cancer in early entrants was reported by Matsuura et al. [263]. This investigation included almost 50,000 subjects who entered the city of Hiroshima in the first 20 days after the bombing; and out of these, 36,000 entered it already between August 6th and 8th. The authors define ‘the city’ as ‘the region within about 2 km of the hypocenter’. Their most important results are summarized in Figure 12.7.

Before discussing the significance of this study, a word about its methodology is in order. The authors used a Cox proportional hazards model, which might more intuitively be called a ‘risk factor model’: if multiple determinants affect the risk, it is assumed that each can be represented by a constant risk factor, to be determined by a global numerical fit, and that the overall risk for a given individual can then be obtained by multiplying all the specific risk factors that apply to that individual. For example, the data in Figure 12.7 include some individuals who were exposed directly at > 2 km from the hypocenter and also came to within < 2 km of it during the first 3 days. The cancer risk of these subjects would be estimated by multiplying the two corresponding risk factors, both of which are close to 1.2, giving a combined factor of about 1.44. Other influences such as sex and age can be accounted and corrected for by assigning them their own risk factors.
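The multiplicative logic can be made explicit. In the Cox model, the hazard of an individual with covariates x₁, x₂, … is h(t | x) = h₀(t) · exp(β₁x₁ + β₂x₂ + …), so each factor that applies multiplies the baseline hazard h₀(t) by exp(βᵢ), its hazard ratio. The sketch below illustrates this; the covariate names are hypothetical, and both coefficients are simply set to log(1.2), the approximate value read off Figure 12.7, rather than to the authors' fitted estimates.

```python
import math

def hazard(baseline_hazard, coefficients, covariates):
    """Cox proportional hazards form: h(t|x) = h0(t) * exp(sum_i beta_i * x_i).

    `baseline_hazard` is h0(t) at some fixed time t; `coefficients` maps
    covariate names to log hazard ratios (beta_i); `covariates` maps the same
    names to values (here 0/1 indicators) for one individual. Covariates that
    are not given are treated as 0.
    """
    linear_predictor = sum(beta * covariates.get(name, 0) for name, beta in coefficients.items())
    return baseline_hazard * math.exp(linear_predictor)

# Hypothetical covariate names; both hazard ratios set to 1.2 for illustration.
betas = {
    "exposed_beyond_2km": math.log(1.2),
    "entered_within_3_days": math.log(1.2),
}

h0 = 1.0  # arbitrary baseline; it cancels out of any hazard ratio
reference = hazard(h0, betas, {})
both = hazard(h0, betas, {"exposed_beyond_2km": 1, "entered_within_3_days": 1})
print(f"combined hazard ratio: {both / reference:.2f}")  # 1.2 * 1.2 = 1.44
```

Because the baseline hazard cancels in every ratio, the model never needs to specify how the underlying cancer risk changes with time; this is what allows a single constant factor per determinant.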

Matsuura et al. focused on the years 1968–1982, because complete records were available to them for those years. Figure 12.7 shows the relative risk of death due to cancer in five sub-populations, defined by different starting dates ranging from January 1968 to January 1980. For each group, the period of observation, during which cancer deaths were counted, began on the respective starting date and ended on December 31, 1982. The authors defined these groups as follows:

Each sub-population included subjects who had already been recognized as survivors before the defined starting date and excluded those who had died or had not been recognized as survivors before this starting date. For example, an individual who had been recognized as a survivor before January 1, 1968, and had lived in Hiroshima Prefecture until December 31, 1982, was included in all the sub-populations.
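The inclusion rule quoted above can be stated compactly as a filter. The sketch below is a hypothetical rendering of it: the record fields (`recognized_on`, `died_on`, `died_of_cancer`) are our own illustrative names, not those of the study, and the residence condition mentioned in the authors' example is not modeled, since its exact role in the selection is not spelled out.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

END_OF_OBSERVATION = date(1982, 12, 31)

@dataclass
class Survivor:
    recognized_on: date              # date of recognition as a survivor (hypothetical field)
    died_on: Optional[date] = None   # None if still alive at END_OF_OBSERVATION
    died_of_cancer: bool = False

def in_subpopulation(s: Survivor, start: date) -> bool:
    """Recognized as a survivor before `start` and not deceased before `start`."""
    recognized_before = s.recognized_on < start
    alive_at_start = s.died_on is None or s.died_on >= start
    return recognized_before and alive_at_start

def counted_cancer_death(s: Survivor, start: date) -> bool:
    """A cancer death counts if it falls between `start` and END_OF_OBSERVATION."""
    return (
        in_subpopulation(s, start)
        and s.died_of_cancer
        and s.died_on is not None
        and start <= s.died_on <= END_OF_OBSERVATION
    )

# Usage: someone recognized in 1960 who died of cancer in 1975 counts in the
# sub-population starting January 1968, but is excluded from the one starting January 1980.
person = Survivor(recognized_on=date(1960, 1, 1), died_on=date(1975, 6, 1), died_of_cancer=True)
print(counted_cancer_death(person, date(1968, 1, 1)), in_subpopulation(person, date(1980, 1, 1)))
```

One consequence of this design is that the later starting dates select ever smaller groups with ever shorter observation periods, which is why fewer cancer deaths can be counted in them.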

We find that the highest relative cancer risk occurs among those survivors who were within 1 km of the hypocenter at the time of the bombing. While this would be expected, there is a surprise: the risk is almost twice as high in women as in men. Given that in all other exposure groups the risk is similar in both genders, I cannot think of a plausible explanation for the large gender difference in this one exposure group.

The group (or, strictly speaking, the risk factor) that we are most interested in is entry into the inner city within 3 days of the bombing. This risk factor hovers near 1.2 for each of the five starting dates reported by the authors. The lower bound of the 95% confidence interval dips slightly below 1.0 for most data points, which means that the elevated risk is not statistically significant at the 5% level.158 To put this into perspective, we must consider the following points:

- In all five exposure groups, the confidence intervals are narrower with observation periods that started earlier, and therefore lasted longer. This is of course expected, since the numbers of cancer deaths counted will be higher in this case. Had suitable data been available for the period before 1968, the increased risk among the early entrants would very likely have been statistically significant as well.

- Matsuura et al. include among the early entrants all survivors who came within 2 km of the hypocenter. Most other studies on early entry, for example Sutou [34], use a smaller radius; the large radius used here will tend to ‘dilute’ the cancer risk.

- The comparison group consists of those who entered later than 3 days after the bombing, presumably for want of a control group without any history of exposure at all. Had a proper control group been available, the incremental cancer risk in early entrants would likely have been greater.
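Both the significance statement and the first point above can be illustrated with the usual Wald interval, HR · exp(±1.96 · SE), where SE is the standard error of the log hazard ratio. For a binary risk factor, SE is roughly √(1/d₁ + 1/d₀), with d₁ and d₀ the numbers of deaths in the exposed and comparison groups. This is a rough approximation of our own choosing, and the death counts below are hypothetical, chosen only to show how the interval scales; they are not taken from Matsuura et al.

```python
import math

def wald_ci(hazard_ratio, se_log_hr, z=1.96):
    """Approximate 95% CI for a hazard ratio from the SE of its logarithm."""
    log_hr = math.log(hazard_ratio)
    return math.exp(log_hr - z * se_log_hr), math.exp(log_hr + z * se_log_hr)

def approx_se_log_hr(deaths_exposed, deaths_reference):
    """Rough SE of a log hazard ratio for a binary factor: sqrt(1/d1 + 1/d0)."""
    return math.sqrt(1 / deaths_exposed + 1 / deaths_reference)

# Hypothetical death counts (exposed, reference); not data from the study.
for d1, d0 in [(100, 400), (200, 800), (400, 1600)]:
    lo, hi = wald_ci(1.2, approx_se_log_hr(d1, d0))
    print(f"{d1:>4} vs {d0:>4} deaths: HR 1.2, 95% CI {lo:.2f}-{hi:.2f}")
```

With these made-up counts, doubling the number of deaths at an unchanged hazard ratio of 1.2 moves the lower bound from about 0.96 to about 1.03, since the standard error shrinks by a factor of √2. A longer observation period works the same way.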

The authors report that, within the limited data available for this study, statistical significance is attained when the period of early entry is limited to only the 6th of August; the incremental risk due to entry on this day is clearly higher than with the three subsequent days. Overall, we concur with Matsuura et al. [263] that their findings demonstrate a moderate but definite incremental risk of cancer in those who came within a radius of 2 km of the hypocenter very shortly after the Hiroshima bombing. This corresponds with similar findings pertaining to acute radiation sickness, which were discussed earlier in Section 8.7. To explain their finding, Matsuura et al. suggest that early entrants were exposed to fallout or induced radioactivity:

There has been little research regarding internal exposure due to intake of food and water contaminated by radiation … it is important to determine conclusively whether the differences among entrants in mortality risk are due to residual radiation.

The elevated cancer risk evident in those with low dose estimates (Section 12.2.2) as well as in early entrants indeed indicates that a resident carcinogenic agent was present in the city for some time after the bombing; but for the various reasons detailed before, we maintain that this agent was sulfur mustard rather than radioactivity.

| 12.2.4 |

Distribution of cancer risk about the hypocenter |

With a proper nuclear bomb, the intensity of radiation should have been highest at the hypocenter and then decreased outward from it in a regular, rotationally symmetrical fashion. The same should therefore be expected of the cancer risk. However, two studies on this question have not found this rotational symmetry at Hiroshima [160,161]. Figure 12.8 illustrates the findings from one of them. The contour lines of equal relative tumor risk are not round, and the distribution of the risk appears centered some 300 m to the west of the hypocenter. At an angle of 178° and a distance of 2 km, the cancer risk equals that at 62° but only 1.2 km out. This may have been due to the wind, which on the day of the bombing is said to have blown toward the west [160], or possibly also to the limited aiming accuracy of the bombing.

| 12.2.5 |

Incidence of cancer in specific organs |

To further examine whether radiation or mustard gas is the more likely cause of cancer among the bombing victims, we might ask whether the distribution of malignancies among different organs is similar between the survivors and groups with known radiation exposure. This turns out to be unprofitable, however, since one cannot find suitable groups for comparison.

Most uses of medical irradiation, both diagnostic and therapeutic, are limited to certain body parts. This uneven exposure makes comparison of cancer incidence between organs largely meaningless. Total body irradiation has been used as a conditioning procedure for bone marrow transplants. However, in what appears to be the only large group of such patients surveyed for secondary malignancies [264], conditioning by irradiation had always been combined with cytotoxic drugs, which also contribute to the risk. Moreover, the underlying disease, as well as immunological complications after the bone marrow transplant procedure, may further skew the organ distribution of secondary cancer. Whole-body exposure to high doses of radiation only, without any confounding treatment, has occurred in some nuclear accidents, but case numbers are far too small for any sort of meaningful statistics.

Readers interested in the question of organ-specific radiation-induced cancer risk may consult two older studies by the United Nations [265] and the National Academy of Sciences [210], which cover it in greater detail than more recent reports issued by the same organizations. We will here forgo a systematic discussion of every organ and instead only give two examples to illustrate that the evidence is indeed inconclusive.

| 12.2.5.1 |

Lung cancer |

Considering the prominent affliction of the lungs and airways in bombing victims who were killed outright or made acutely ill, we might expect the incidence of lung cancer to be more prominently elevated among survivors than that of other cancers. There are indeed some studies to suggest that this is the case; for example, Ishikawa et al. [8, p. 286] summarize an early study from Hiroshima that finds the relative risk of lung cancer to be higher than that of the four other organs listed (breast, stomach, ovary, and cervix uteri). However, case numbers in this study are very small. As noted above, the study by Watanabe et al. [262] found the relative excess risk of lung cancer to be lower than the average of all cancers in men, while it was somewhat above the average in two out of three dose groups in women. Thus, there is no strong evidence of a preferential affliction of the lungs.

If indeed the lungs are not preferentially afflicted by cancer, should we consider this evidence against the use of sulfur mustard in the bombings? Lung cancer rates were indeed greatly increased among former workers of the Okunoshima mustard gas factory [266]. However, most of these workers had been exposed to the poison continuously for more than five years, and some for more than ten, whereas the bombing survivors suffered only a single acute exposure. More suitable for comparison are veterans who were exposed to the gas in battle. Studies on British [267] and Iranian [268] veterans found only slightly and non-significantly increased rates of lung cancer among them. Overall, therefore, the available data don’t provide conclusive evidence for or against the use of sulfur mustard in the bombings.159

| 12.2.5.2 |

Thyroid cancer |

Among the bombing survivors, the highest relative risk of any solid cancer pertains to the thyroid; it is exceeded only by the risk of leukemia. Parker et al. [127] reported a relative risk of 9.4 in men and 5.0 in women, but each value has a very large 95% confidence interval. Schmitz-Feuerhake [260] suggested that this high cancer incidence was caused by uptake of ¹³¹I, a short-lived radioactive iodine isotope that is formed by fission of ²³⁵U or ²³⁹Pu, and which indeed produced thyroid cancer in the general population near Chernobyl after the reactor meltdown there [269,270]. This explanation would, of course, require significant exposure to fallout from the nuclear detonation, which is ruled out by the findings presented in Chapter 3.

Thyroid cancer is also readily induced by radiation from sources other than incorporated radioactive iodine [265, p. 226]. On the other hand, in Section 7.2.4, we suggested that metabolic activation of sulfur mustard in the thyroid gland might cause preferential carcinogenesis in this organ. Zojaji et al. [126] reported two cases of thyroid cancer among 43 Iranian veterans who had been exposed to the poison.

Overall, therefore, the high incidence of thyroid cancer seems compatible with both nuclear radiation and sulfur mustard as the underlying cause. These two examples may suffice to illustrate that this line of inquiry holds little promise for the purpose of this study.

| 12.3 |

Long-term disease other than cancer |

For these diseases, the situation is similar to that noted above for cancers of specific organs: the available information is mostly of low resolution and on the whole does not provide unequivocal evidence for or against the thesis of this book. We will again discuss only selected examples.

| 12.3.1 |

Cardiovascular and respiratory disease |

The functions of the heart and of the lungs are closely linked, and disease in one will often affect the other as well. If pulmonary gas exchange and blood flow are restricted by some chronic lung disease, the right heart, which pumps the blood into the lungs, will show characteristic signs of strain and may eventually give out. Disease of the left heart, which receives blood from the lungs and pumps it into the general circulation, will cause blood to back up into the lungs. This can result in acute lung edema; a lesser degree of lung edema will promote pneumonia. From these considerations, we can draw the following conclusions: (1) a diagnosis of pneumonia or heart failure on a death certificate does not tell us whether the organ in question really was the primary focus of disease; and (2) broad diagnostic categories such as ‘cardiovascular disease’ or ‘non-cancer lung disease’ do not provide sufficient information to reason about disease mechanisms.

As it turns out, most of the information contained in long-term studies on survivors is precisely of this fragmentary sort. We can only say that the incidences of ‘cardiovascular disease’ and of ‘non-cancer lung disease’ are somewhat elevated in the bombing survivors; should readers still put stock in the radiation dose estimates, they will be pleased to find that numbers for the excess risk per gray are readily available [271–274]. Cardiovascular disease was also found to be more prevalent among American radiologists [275] and in Iranian veterans exposed to mustard gas [276]. The latter study catalogs a number of specific diagnostic findings, from which it concludes:

Induction of cardiomyopathy, reduction in left ventricular ejection fraction and loosening of right ventricle appear to be the most important latent complications of sulphur mustard exposure.

This statement suggests both direct toxic action on the heart and indirect effects of lung disease. The diagnosis and treatment of some Iranian veterans with particularly severe chronic obstructive lung disease is described by Freitag et al. [186]. The latter study also well illustrates that a small number of competently and thoroughly documented disease cases can be much more instructive than large-scale statistics that employ very broad and generic categories. Unfortunately, no individual case descriptions of cardiovascular or pulmonary disease among the Japanese bombing survivors are available.

The thesis of this book implies that lung damage similar in nature and in severity to Freitag’s cases should also have occurred in the ‘nuclear’ bombings. With the poor level of medical care available to the survivors, it seems likely that many such cases would have taken a fatal course even before 1950, that is, during the time period for which not even retrospective health statistics are available. Overall, therefore, we can only state that the very limited information on these victims appears compatible with either radiation or mustard gas as the primary causative agent.

| 12.3.2 |

Cataract |

Cataract is a clouding of the normally transparent lens of the eye. It can occur spontaneously, mostly in old age, but it can also arise in response to specific pathogenic stimuli. The most common one of these is the elevated blood glucose level in diabetic patients; a similar disease mechanism operates in galactosemia, which is a rare disease. Both ionizing radiation [277] and cytotoxic anticancer drugs [138,278] can produce cataract as well. The previously cited study on American World War II veterans who were misused as guinea pigs for studies on sulfur mustard and lewisite states that 50 out of 257 respondents to a health survey reported cataracts or other eye problems; the exact proportion of cataracts within this number is not stated [21, pp. 384–385].

Radiation cataract has been the subject of numerous experimental and clinical studies. In assessing them, we need to be clear about what exactly constitutes cataract. While in common usage the diagnosis implies that lens opacification is so severe as to cause manifest impairment of visual acuity, most statistical and experimental studies count as cataract any turbidities that can be observed using ophthalmological instruments, even if vision is not affected.

The dose–response relationship assumed for radiation cataract has seen some revisions over time. Merriam and Focht [279], who examined a series of 73 patients, found a threshold dose for single exposure of 200 R (roentgen; approximately 2 Gy); if radiation was administered in multiple sessions, the threshold dose was higher. A complicating factor is that cataract, once initiated, can progress slowly over time, so that the degree of severity and the threshold dose will be influenced by the time span of observation. The cited study had an average follow-up period of 8 years after irradiation.
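The conversion of Merriam and Focht's 200 R into gray can be checked with a few lines of arithmetic. The sketch below uses the definition 1 R = 2.58 × 10⁻⁴ C/kg of air and a mean energy per unit charge in dry air of W/e ≈ 33.97 J/C to obtain the corresponding air kerma. The absorbed dose in soft tissue is somewhat higher; the conversion factor of 0.95 cGy per roentgen used for it below is an assumed round value, and the function names are our own.

```python
# Definition of the roentgen: 1 R = 2.58e-4 coulomb of ionization per kg of air.
ROENTGEN_IN_C_PER_KG = 2.58e-4
# Mean energy expended in dry air per unit charge of ions formed (W/e), in J/C.
W_OVER_E_AIR_J_PER_C = 33.97

def roentgen_to_air_kerma_gy(exposure_r: float) -> float:
    """Convert an exposure in roentgen to (collision) air kerma in gray (J/kg)."""
    return exposure_r * ROENTGEN_IN_C_PER_KG * W_OVER_E_AIR_J_PER_C

def roentgen_to_tissue_dose_gy(exposure_r: float, f_factor_cgy_per_r: float = 0.95) -> float:
    """Approximate soft-tissue dose; the default of 0.95 cGy/R is an assumed round value."""
    return exposure_r * f_factor_cgy_per_r / 100

print(f"{roentgen_to_air_kerma_gy(200):.2f} Gy air kerma")  # about 1.75 Gy
print(f"{roentgen_to_tissue_dose_gy(200):.2f} Gy in soft tissue (assumed factor)")
```

Either way, 200 R corresponds to somewhat less than 2 Gy, so "approximately 2 Gy" is a fair rounding.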

A study on patients who had received radiation therapy as infants, and who were examined 30–45 years after this treatment, was reported by Wilde and Sjöstrand [280]. These authors found minor but consistently observable turbidities in eyes exposed to as little as 0.1 Gy. They ascribe this very low threshold to a higher sensitivity of the infant lens. They also report a fairly clear and uniform progression of cataract severity with radiation dose.

Since radiation cataracts were expected in atomic bomb survivors, they were frequently examined for this condition. Flick [193] was looking for it as early as 1945, although he was soon persuaded by the evidence to shift his focus to retinal hemorrhages instead (see Section 10.2.3). A small series of clinically manifest cases was reported by Cogan et al. [281]. While several large-scale studies report fairly high incidences of cataract, only a small fraction of these patients have clinically deteriorated vision.

| Distance (km) | Examined cases | Cataracts (n) | Cataracts (%) |
|---|---|---|---|
| Under 2 | 159 | 87 | 54.7 |
| 2–3 | 126 | 25 | 19.8 |
| 3–4 | 126 | 4 | 3.2 |
| Over 4 | 25 | 1 | 4.0 |
| Totalᵃ | 436 (435) | 117 (116) | |

ᵃ Numbers in brackets are given in the original [282].
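As a quick check of Table 12.3, the following sketch recomputes the percentages and column totals from the row counts. The rows sum to the unbracketed totals (436 examined, 117 cataracts), whereas the original lists 435 and 116. The sketch also pools the three bands beyond 2 km, which is our own aggregation rather than one reported by Kandori and Masuda.

```python
# Row counts copied from Table 12.3 (Kandori and Masuda [282], Hiroshima only).
ROWS = [
    ("Under 2", 159, 87),
    ("2-3", 126, 25),
    ("3-4", 126, 4),
    ("Over 4", 25, 1),
]

def cataract_percent(examined: int, cataracts: int) -> float:
    """Percentage of examined survivors with cataract, rounded to one decimal."""
    return round(100 * cataracts / examined, 1)

def column_totals(rows):
    """Sum the examined and cataract columns over the given distance bands."""
    return sum(r[1] for r in rows), sum(r[2] for r in rows)

for label, examined, cataracts in ROWS:
    print(f"{label:>8} km: {cataracts:>3}/{examined:<3} = {cataract_percent(examined, cataracts)}%")
print("Totals (examined, cataracts):", column_totals(ROWS))

# Pooled bands beyond 2 km (our own aggregation).
beyond_examined, beyond_cataracts = column_totals(ROWS[1:])
print(f"Beyond 2 km: {cataract_percent(beyond_examined, beyond_cataracts)}%")
```

Pooled this way, about one in nine survivors examined beyond 2 km had cataract.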

Predictably, more recent studies use radiation dose estimates as the explanatory variable, and they find an increased risk of clinically manifest cataract requiring surgery at doses below 0.5 Gy [283]. This does not agree with clinical studies of known radiation doses and is most likely due to erroneous dose assignment.160 An older study that predates the radiation dose estimates, and therefore uses distance from the hypocenter as its covariate, is that by Kandori and Masuda [282]. The results of this study, which reports on survivors from Hiroshima only, are shown in Table 12.3. As expected, the incidence of cataract decreases with distance from the hypocenter. However, an appreciably increased number is found beyond a distance of 2 km, at which radiation doses should have been too low to induce cataract in all but the youngest subjects (but the authors do not state that all of those affected beyond 2 km had been young). This pattern of incidence falling off more slowly than the presumed radiation dose resembles that found earlier with acute radiation sickness (see Section 8.4).

| 12.4 |

Conclusion |

The diseases considered in this chapter add to the evidence against the nuclear bombings more through their temporal and spatial distribution than through their specific clinical manifestations, on which there simply is too little information in the published literature. It seems likely that such information is kept under lock and key in the archives at RERF.

With this chapter we finish our inquiry into the medical observations in Hiroshima and Nagasaki. We conclude that even on their own, without recourse to the physical studies discussed in the first part of this book, the medical arguments alone suffice to unambiguously reject the story of the atomic bombs. At the same time, they provide strong support for mustard gas and napalm as essential components of the massacre.