On various blogs and websites dedicated to science and politics, usually of conservative persuasion, it is often alleged that the ban on the insecticide DDT, motivated by concerns about its damaging effects on the environment, has caused tens of millions of avoidable malaria deaths over the last couple of decades. This position is untenable in light of the following scientific and historical facts:

- DDT is banned internationally for use in agriculture, but its use in malaria control remains permitted under the regulations of the Stockholm Convention. The production of DDT and its use in malaria control have never been discontinued.

- While DDT is cheaper than most other insecticides, its cost of manufacture has risen along with that of petroleum, the major required raw material. Moreover, like other insecticides, DDT selects for resistance in the targeted insect vectors. Rising cost and widespread resistance, not regulation, are the key reasons for the limited and declining worldwide use of DDT.

- Most malaria fatalities occur in Africa. On this continent, no comprehensive effort has ever been made to control or eradicate malaria; instead, all such projects occurred only on a local or regional scale, and many were abandoned after only a few years.

- In the most severely affected parts of the world, only a small fraction of malaria cases are actually seen by health care workers or recorded by health authorities. Regardless of the tools employed, effective malaria control is impossible with such inadequate levels of organization and preparedness.

Malaria remains rampant because control efforts lack resources and political support, not because of the choice of insecticide. Where insect resistance to it is not yet widespread, DDT remains a legitimate weapon against malaria. However, DDT is not a panacea, and the limited restrictions imposed on its use are not a significant factor in the current deplorable state of affairs in malaria morbidity and mortality.

1. Preliminary note

This treatise is a bit of a hybrid between a blog post and a proper academic review. On the one hand, it addresses a rather limited and specific point of contention; on the other, it also tries to provide sufficient background to properly explain and support its position. A note on sources: The historic discussion is based mostly on two excellent books [1,2]. Some of the additional references I consulted on other topics are cited in the text.

2. Malaria

Malaria is an infectious disease of humans that is caused by five different species of the protozoan genus Plasmodium. Among these, Plasmodium falciparum is the most abundant globally and causes the most severe disease, whereas infections by P. vivax, P. malariae, P. ovale and P. knowlesi are less frequent and usually milder. Exact numbers for the total disease burden are not available. Estimates vary widely; [4] posits some 450 million clinical cases of P. falciparum malaria in 2007 as the most likely number, but significantly lower and higher numbers have been suggested [5]. Similar uncertainty pertains to the global distribution; while many sources state that 90% of all cases occur in Africa, [4] estimates that some 30% occur in South and South East Asia, and an additional 10% in other parts of the world. This large uncertainty reflects the paucity of health care and surveillance in the most heavily affected areas.

Malaria is transmitted by numerous mosquito species, all of which belong to the genus Anopheles. In humans, the parasites injected by the biting mosquito multiply first in the liver and subsequently in red blood cells (RBCs), whose hemoglobin provides them with a rich source of food. Fever attacks coincide with the synchronized release of progeny parasites from exhausted RBCs, because the immune system reacts to the parasites while they are outside the cells. The attack subsides as the parasites enter and hide away within fresh RBCs to repeat the cycle. Anemia and fatigue arise from the depletion of red blood cells, whereas damage to various organs can result from obstruction of the microcirculation by infected cells and from dysregulation of blood clotting.

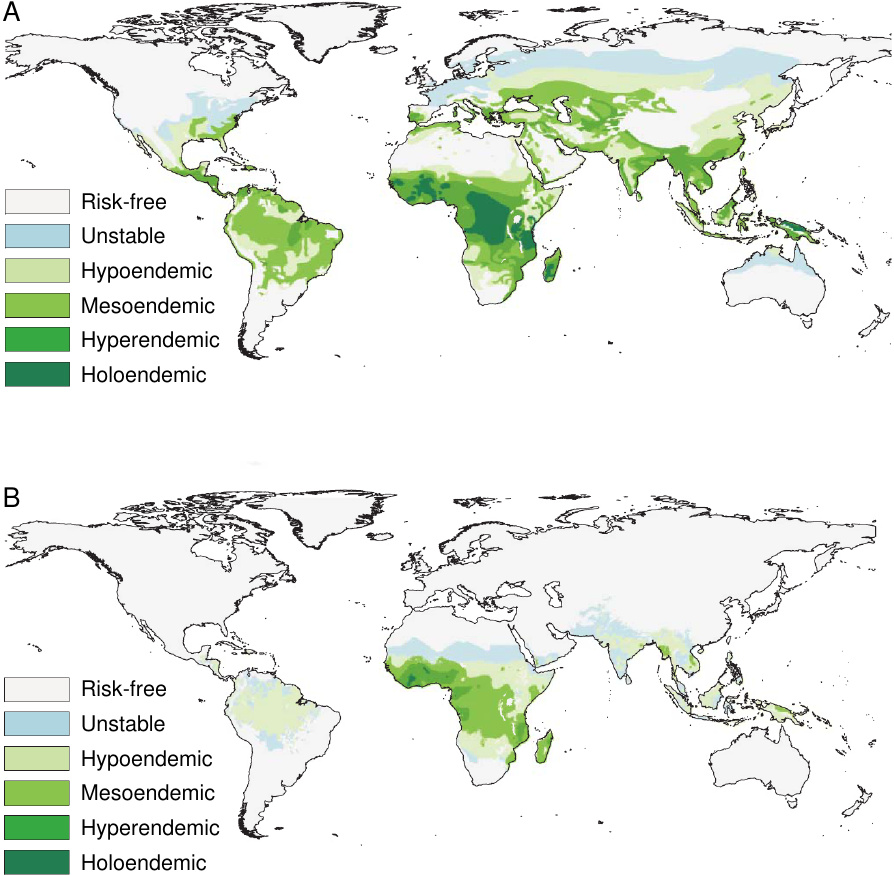

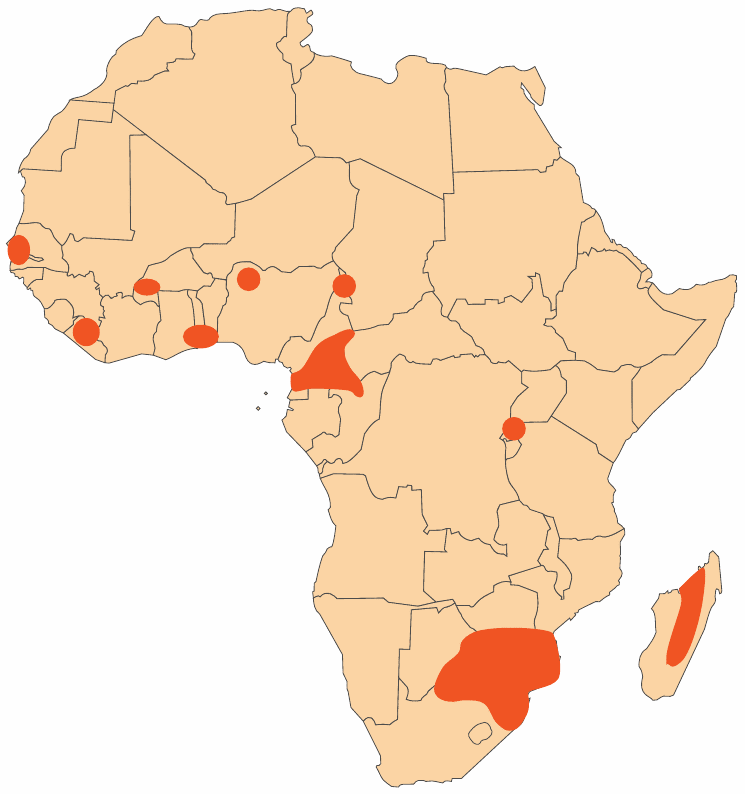

The disease occurs in both tropical and temperate climate zones (see Figure 1). It is, however, more widespread and more entrenched in tropical countries, owing to both climatic and socio-economic factors. The parasites develop only at temperatures above 16 °C (61 °F). Thus, development within the mosquitoes and subsequent transmission to humans occur year-round in the tropics but are seasonally limited in temperate zones. Socio-economic factors include the affordability of antimalarial drugs and insecticides, but also the design and maintenance of human dwellings and of the landscaping around them, which may provide mosquitoes with breeding grounds and easy access to human hosts.

Malaria-related parasites and diseases occur in numerous vertebrates; however, with the exception of P. knowlesi, which is acquired from macaque monkeys, the causative agents of human malaria have humans as their only vertebrate host. In principle, this should make disease eradication feasible: just as smallpox was eradicated by comprehensive vaccination, it should be possible to wipe out malaria by suppressing multiplication of the parasite in the human host. Immunization against malaria has proven unexpectedly difficult (despite many efforts that have used the full arsenal of modern genetic and biochemical methods, effective vaccines are lacking to this day [6]), but proliferation of the parasite can be inhibited with drugs. Chemoprophylaxis, that is, preventive drug treatment, can be highly effective. The best example of this has been chloroquine, which was widely used for both prevention and treatment until resistance in Plasmodium became widespread.

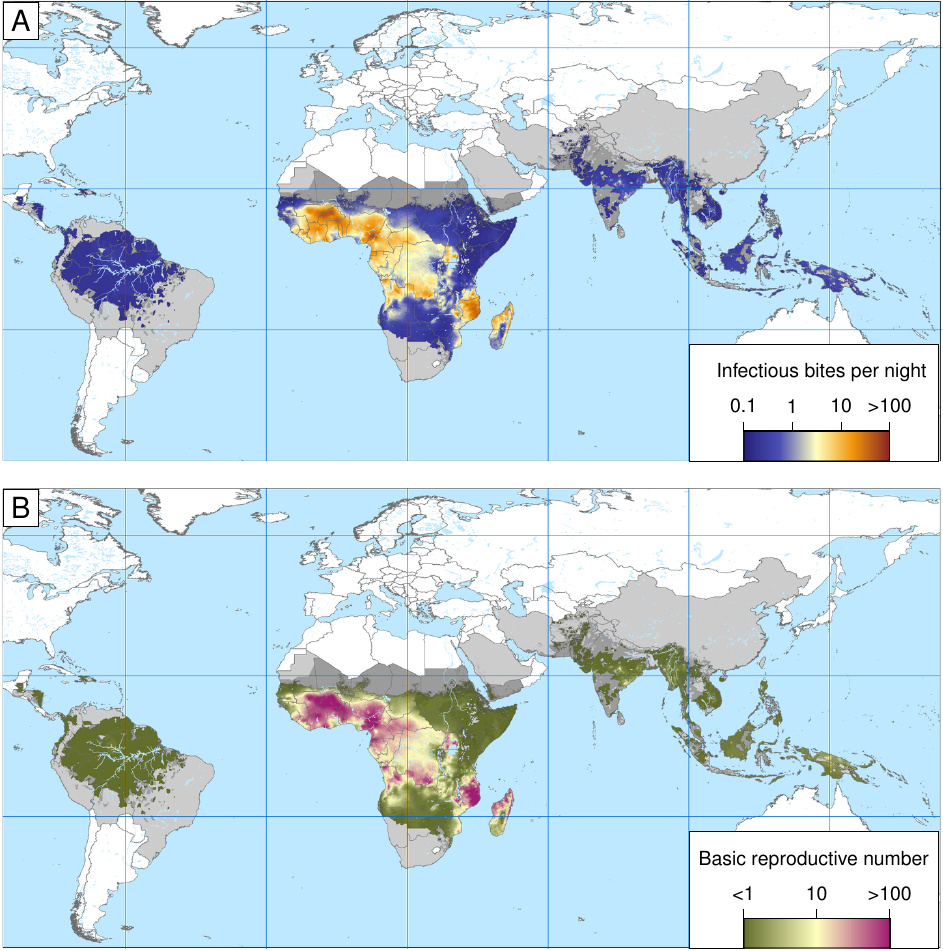

An alternative approach to disease eradication is the use of insecticides. These may be directed either against mosquito larvae, which requires widespread application in the environment, or against the adult forms, in which case the use is mostly limited to human dwellings. While it is of course impossible to prevent every single infectious mosquito from biting, this is also not necessary. In principle, it should suffice to reduce the average number of new human infections that arise, via mosquitoes, from each infected person (the so-called basic reproductive number) to less than one, so that the reservoir of malaria-infected humans declines steadily.
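The threshold logic can be made concrete with a deliberately crude sketch. It assumes discrete, non-overlapping transmission generations (human to mosquito to human) and a constant reproductive number `r`, and it ignores immunity, seasonality, and the mosquito's own life cycle. The function name is a hypothetical illustration, not part of any malaria model from the sources cited here.

```python
def infected_by_generation(initial_cases: float, r: float, generations: int) -> list[float]:
    """Expected number of infected humans in each transmission generation.

    Simplifying assumption: every infected person gives rise, via mosquitoes,
    to `r` new human infections on average, and `r` stays constant over time.
    """
    counts = [float(initial_cases)]
    for _ in range(generations):
        # Each generation of cases is replaced by r times as many new cases.
        counts.append(counts[-1] * r)
    return counts


if __name__ == "__main__":
    for r in (1.5, 0.8):
        series = infected_by_generation(1000, r, 10)
        trend = "grows" if series[-1] > series[0] else "shrinks"
        print(f"r = {r}: reservoir {trend} from {series[0]:.0f} to {series[-1]:.0f} cases")
```

With `r` below one the reservoir shrinks geometrically even though transmission never stops entirely. With `r` above one it keeps growing.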

Figure 2 illustrates the global variation of the basic reproductive number, as well as of the entomological inoculation rate, that is, the average number of infectious mosquito bites a person receives per night. Both numbers are astonishingly high in some parts of tropical Africa. The higher these baseline numbers are, the higher the mountain we have to climb when trying to control or even eradicate malaria.
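The height of that mountain can be expressed with a simple rule of thumb. Assume that control measures scale transmission down proportionally, so that R = (1 - p) · R0 for a fractional reduction p. Pushing R below one then requires p > 1 - 1/R0. The proportional-scaling assumption is a simplification: in classical Ross-Macdonald theory it holds for a reduction in mosquito density, but not, for example, for changes in mosquito survival. The R0 values in the usage example are hypothetical and are not read off Figure 2.

```python
def required_reduction(r0: float) -> float:
    """Minimum fractional cut in transmission that brings R below 1.

    Assumes control scales transmission proportionally: R = (1 - p) * r0.
    Returns 0.0 when r0 is already at or below 1.
    """
    if r0 <= 1.0:
        return 0.0
    return 1.0 - 1.0 / r0


if __name__ == "__main__":
    # Hypothetical baseline values spanning low to very high transmission.
    for r0 in (2, 10, 100, 1000):
        print(f"R0 = {r0:>4}: cut transmission by more than {required_reduction(r0):.1%}")
```

The printed thresholds are 50.0%, 90.0%, 99.0% and 99.9%. A tenfold higher baseline turns a 90% requirement into a 99% requirement, which is a far harder target in the field.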

2.1. Endemic and epidemic malaria

In some countries, particularly in sub-Saharan Africa, malaria is endemic, which means that it is continually present in a high percentage of the population. (There are degrees of endemicity, as indicated in Figure 1.) Children become infected soon after birth, and many of them succumb; malaria is estimated to be responsible for up to 25% of infant mortality [8]. Survivors develop relative (that is, incomplete) immunity, which is maintained by frequent reinfection. Accordingly, morbidity and mortality among adults and older children are much lower than among newborns and infants. Many people carry more than one Plasmodium species, while others harbor multiple populations of the same species that propagate in the bloodstream on separately synchronized schedules, indicating that they arose through separate transmission events.

Locations with endemic malaria tend to have warm, humid climates, often with multiple indigenous Anopheles mosquito species. These breed in swamps, irrigation canals, ponds, or other bodies of stagnant water close to human settlements. Drainage of swamps, usually carried out to increase arable land, has been a major factor in the successful eviction of malaria from Europe and North America.

In regions where general conditions are not as consistently favorable to its transmission, malaria can take the form of epidemics. These can be triggered by climatic events. An example is the epidemic that visited Ceylon (Sri Lanka) in 1934. The following account of this epidemic is taken from [1] (page 203f; insertions and omissions mine):

In normal years Anopheles culicifacies [the main local vector species] are hard to find. They do not breed in rivers, which are full of fast-flowing water. … When both monsoons failed in 1934, the principal rivers … shrank to strings of pools, and these in the spring began to teem with A. culicifacies. They hatched and bred through the long summer.

Authorities with long experience could see what was coming in the unclouded skies of April and May. At least, they predicted an increase in fever and ordered wider distribution of quinine. But there was really no defense. … Moreover, the people were unusually vulnerable. Following … weather unfavorable … for the breeding of mosquitoes [before the epidemic], the fever had receded … More babies … [had] escaped infection after birth. The proportion of children to adults was thus higher than normal, as was the proportion of non-immune children to immune. … By mid-December, half a million people, or 10% of the Ceylonese population, were ill. During 1935, the epidemic … intensified its fury … at last, in November and December, normal widespread rains refilled the rivers and drowned out the epidemic. By then, nearly a third of the people had fallen ill; nearly 80,000 people died.

As is clear from this description, epidemic malaria may in some ways be worse than the endemic form. While the long-term average of case numbers will be lower, more of these cases will affect people without preexisting immunity, and the affliction of adults will cause greater social disruption, as will the sudden surge of case numbers, which may overwhelm health care workers and supplies.

When trying to wipe out malaria in endemic areas through mosquito control, and only partially succeeding at it, we might find ourselves simply shifting the disease from an endemic to an epidemic pattern, without much gain in terms of life expectancy, and quite possibly negative effects on social stability. Objections of this kind were raised before the large-scale eradication programs were initiated by the WHO; they were, however, overruled. Indeed, such outcomes were repeatedly observed when local malaria control efforts were neglected or abandoned in Africa, and in some instances even before the WHO eradication initiatives [2].

2.2. Early malaria eradication efforts

The first effort to eradicate malaria was undertaken in the late 19th century by Ronald Ross, the chief discoverer of the infectious cycle of malaria. Soon after his discovery, he secured a grant from a wealthy Scottish (!) donor and set out to drive malaria from Freetown, Sierra Leone (then a British colony). With the characteristic energy he had displayed earlier both in his investigations on malaria and in combating an outbreak of cholera in Bangalore, he organized a city-wide effort to drain and fill in any pool or puddle, and to cover any remaining stagnant water with oil so as to suffocate any hatching mosquito larvae.

The attempt was unsuccessful. While this may be ascribed to Ross’ limited powers and reluctant cooperation from the populace, no such explanations are plausible for the resounding failure of a similar but larger, more sustained, and better organized effort, undertaken soon after in Mian Mir, a British military base in India.

The first convincingly successful malaria control project was carried out by Malcolm Watson, then a colonial sanitation officer in the Federated Malay States, a British protectorate. An equally brilliant and energetic man, Watson did an enormous amount of meticulous field work to identify vector species and to characterize their different requirements for propagation, and then designed proper strategies for larval control and infection avoidance for each of them.

Watson described his work in several books. One of these [9], which is available from my website, also gives a vivid account of the next major undertaking of malaria control, which took place during the construction of the Panama Canal. This work, performed under the energetic command of William Gorgas, who later became Surgeon General of the US Army, marks the first convincing success of large-scale malaria control in a tropical climate. Various measures of insect control, all low-tech but relentlessly applied, effectively wiped out yellow fever, a viral disease that is transmitted by a different mosquito, Aedes aegypti. Case numbers of malaria were greatly reduced, but not brought to zero. To this day, this campaign remains a compelling example not just of possible success, but also of the expense, discipline, and drudgery required to achieve it. For example, when local conditions rendered other means of insect control unworkable, Gorgas resorted to the use of mosquito swatting squads, a practice one would more readily associate with the imperial court of ancient Persia.

In Europe, Italy was one of the hot spots of malaria well into the 20th century. Most heavily affected was the south, and in particular Campania, the swampy coastal plain between Rome and Naples, where destitute farm workers toiled like slaves in all but name. Camped out without protection near the mosquito breeding grounds, they suffered a heavy toll of disease and death year after year. Clear-sighted physicians advocated land reform and social change, but without immediate success. State-administered distribution of quinine brought relief, but not eradication. It fell to the Fascist government, which came to power in 1922, to tackle the problem energetically through drainage of swamps, land reforms that turned many dependent workers into small landowners, and mandatory use of insect screens in newly constructed settlements. These policies pushed back the disease but again did not eradicate it. Malaria spread again during the fighting that took place from 1943 to 1945; eradication was accomplished, with the use of DDT, only after the war. Both before and after the war, the Rockefeller Foundation played an important part in fighting malaria in Italy, as it did in many other places [1,10].

3. DDT

DDT (1,1,1-trichloro-2,2-bis(4-chlorophenyl)ethane) is one of the most effective insecticides yet invented. The compound had first been synthesized in 1874, but its insecticidal activity was discovered only in 1939, by Paul Müller at Geigy in Switzerland; soon after, it was distributed for use against clothes moths and body lice. It is active against a broad spectrum of insects, in both larval and adult stages, because it disrupts a key molecule of nerve and muscle cell function, the voltage-gated sodium channel, by keeping it open abnormally long [11]. It is cheap to manufacture and chemically quite stable. It is also very lipophilic, which means that it dissolves preferentially in fat, oil, or organic solvents but is only poorly soluble in water; therefore, it is not easily washed off by moisture but tends to stick around. This is advantageous in practical use, since it allows for long intervals between applications. On the other hand, it favors persistence in the environment as well as accumulation along the food chain.

3.1. Use in malaria eradication programs

Beginning in 1943, DDT was used to combat and even eradicate malaria, first in the Mississippi valley and soon after in Italy, Greece, and several other countries. Encouraged by these results, the WHO (World Health Organization) launched a global eradication program in the mid-1950s. The strategy was informed by recent experience, particularly from the Italian island of Sardinia. Here, the indoor use of DDT, intended to disrupt the chain of transmission between host and insect vector, had succeeded in extinguishing malaria. At the same time, DDT and other insecticides had been applied outdoors on a massive scale, but this campaign had failed to exterminate the local Anopheles vector species. Therefore, the WHO program focused on indoor application of DDT; every room in every house was to be thoroughly sprayed with the stuff twice every year.

A complementary measure in the WHO eradication program was the comprehensive surveillance for early detection and prompt treatment of malaria cases. Surveillance involved the preparation and microscopic evaluation of blood smears from all suspected cases. Comprehensive treatment was facilitated by the availability of cheap and effective antimalarial drugs, particularly chloroquine.

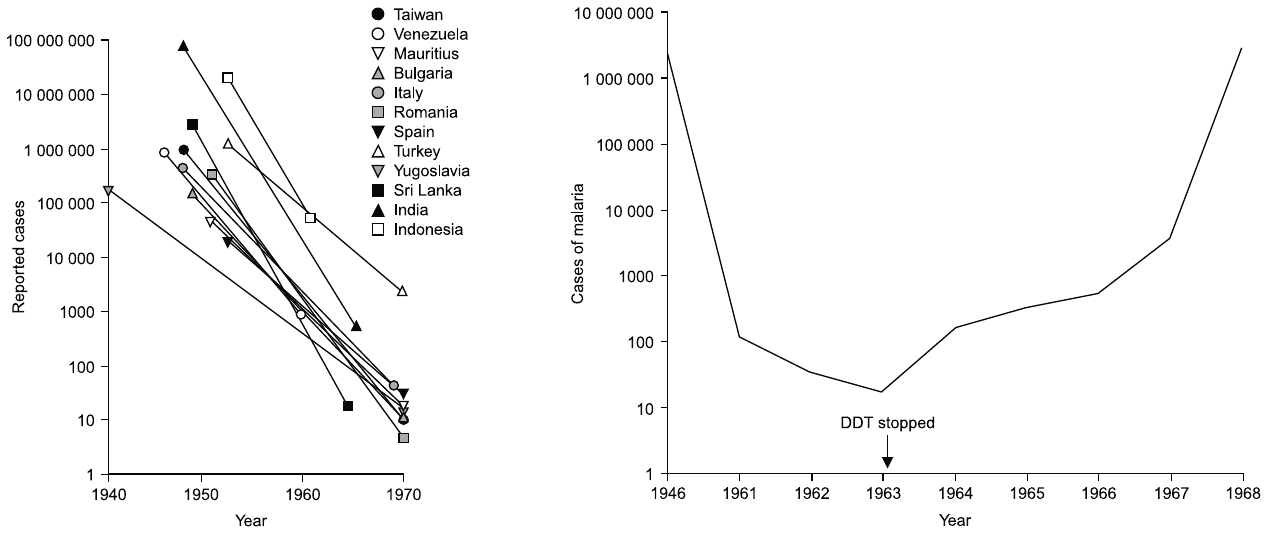

Diligent execution of this program led to successful eradication in several countries, mostly in America and South East Asia, and to a dramatic decline in case numbers in many other countries (see Figure 4). Despite these impressive gains, the program ultimately failed in most participating countries. In the sections below, I will discuss some of the difficulties that led to this failure. We can, however, note right here that the WHO officially acknowledged the failure of eradication, restating its goal as “control” rather than eradication, as early as 1969, three years before environmental concerns culminated in the ban on DDT in the United States (see below).

3.2. Environmental toxicity; ban of agricultural use

I have not researched the question of DDT’s environmental toxicity thoroughly; I have not read Rachel Carson’s famous book “Silent Spring”, nor any of its rebuttals. My reason is that this question matters less to the issue at hand than one might think. While it seems quite possible that large-scale outdoor application of DDT in agriculture will create excessive pollution and damage to wildlife, indoor use against malaria involves incomparably smaller amounts overall, and the environmental burden caused by such limited application will certainly be negligible.

The concerns about environmental damage, first brought to the fore by Carson, persuaded the US Environmental Protection Agency to ban DDT use in 1972. However, this ban applies only to agricultural use. DDT use in malaria control was exempted, and it remains permissible to this day under the Stockholm Convention [13]. Indeed, considering that DDT has become something of a poster child for misguided environmentalist activism, it is a little ironic that it is one of very few organochlorine insecticides to retain such approval. In contrast, dieldrin and several other organochlorine compounds have been banned categorically under the Stockholm Convention.

So, how did the limited ban on DDT affect malaria control? Firstly, it is worth noting that DDT never went out of production, and it remains commercially available from suppliers in India and elsewhere. Secondly, the ban on agricultural use may quite possibly have slowed down the development of insect vector resistance and thus preserved the usefulness of DDT for malaria control for longer than would have otherwise been the case.
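The argument that reduced exposure slows resistance can be illustrated with a textbook haploid selection model, which is a strong simplification for diploid mosquitoes. Carriers of a resistance allele are assumed to have relative fitness 1 + s wherever the insecticide is encountered, so the allele frequency p changes each generation as p' = p(1 + s)/(1 + sp). Broader use, as in agriculture, is modeled crudely as a larger s. The function name and all parameter values below are hypothetical and are not measured for any Anopheles population.

```python
def generations_to_frequency(p0: float, s: float, target: float = 0.5,
                             max_generations: int = 100_000) -> int:
    """Generations until a resistance allele reaches `target` frequency.

    Haploid selection model: resistant carriers have relative fitness 1 + s,
    susceptible ones 1, so p' = p * (1 + s) / (1 + s * p).
    """
    p = p0
    for generation in range(max_generations):
        if p >= target:
            return generation
        p = p * (1 + s) / (1 + s * p)
    raise ValueError("target frequency not reached; is s > 0?")


if __name__ == "__main__":
    start = 1e-4  # hypothetical initial frequency of the resistance allele
    for s in (0.5, 0.1):  # hypothetical strong vs. weak selection
        print(f"s = {s}: {generations_to_frequency(start, s)} generations to 50%")
```

In this model the odds p/(1 - p) grow by a factor 1 + s per generation. The time to spread therefore scales roughly with 1/ln(1 + s): about 23 generations for s = 0.5 versus about 97 for s = 0.1. The absolute numbers mean little. The point is only that weaker selection buys time.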

3.3. Human toxicity

Like environmental toxicity, this question is not really crucial. DDT and some of its degradation products accumulate in human tissues, but most attempts to link DDT and disease remain vague [14], and substantial evidence of toxicity seems to be limited to long-term malaria control workers [15,16]. Such workers would certainly have been exposed to far higher doses than the inhabitants of houses sprayed with DDT twice per year (a typical schedule), and it should be entirely feasible to protect all personnel from dangerous levels of exposure through the proper use of protective gear.

For people in endemic areas who do not themselves dispense DDT, the health risk of malaria itself will be orders of magnitude greater than that caused by exposure to DDT. Therefore, like environmental toxicity, the health risk of DDT does not provide a rational argument against its use for malaria control, unless superior insecticides are readily available.

3.4. Resistance to DDT

In contrast to environmental and human toxicity, Anopheles resistance to DDT is a serious limitation to its continued use for malaria control. There are two molecular mechanisms of resistance. First, point mutations in the target molecule, the voltage-gated sodium channel, reduce its binding affinity for DDT. Second, mutations that increase the activity of detoxifying enzymes, such as cytochrome P450 enzymes, enhance the capacity of the mosquito to degrade the compound.

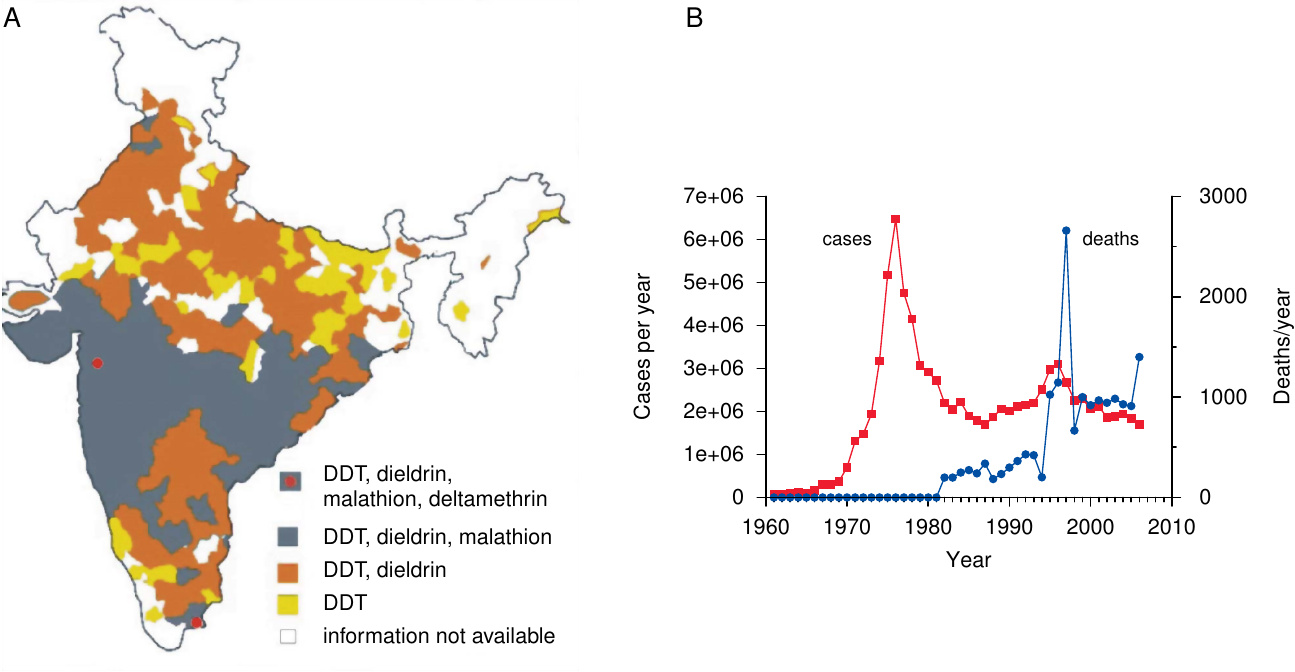

Resistance was observed as early as 1946 and has since become ever more widespread. Figure 5A shows that resistance affects essentially all of India, a country that has never stopped using DDT since its introduction. While data for Africa are limited, resistance is quite common there as well.

The emergence and spread of resistance to DDT prompted the adoption of several other insecticides for malaria control, and, as is evident from Figure 5A, resistance to these compounds is also on the march; indeed, some mutations that confer resistance to DDT can also cause cross-resistance to other insecticides.

4. Why did malaria eradication fail?

Looking back on history, one certainly has to admire the clear-sightedness of those who initiated the ambitious eradication program in the 1950s. The availability both of DDT and of cheap, effective antimalarial drugs such as chloroquine and pyrimethamine gave the world its best shot yet at conquering this disease. In several of the countries included in Figure 4A, the scheme indeed worked as intended and achieved eradication. However, in other countries, things did not go as well; an example, Sri Lanka, is also illustrated in the figure. Another notable failure was India, in which malaria forcefully rebounded in the 1970s. Where did things go wrong?

4.1. India and Sri Lanka

Figure 4B shows that case numbers in Sri Lanka rebounded after 1963, the year that general DDT spraying of houses was discontinued. Why, then, did someone decide to stop it in the first place? This was in fact part of the official strategy: once malaria incidence had been reduced below a certain threshold, the focus was to shift to detection and medical treatment of malaria cases, that is, from the insect reservoir to the human reservoir of the parasite [19]. Considering that the official nationwide figure for malaria cases was well below 50 in 1963, it would seem reasonable to conclude that the human reservoir had been brought under control sufficiently to allow this shift. However, as it turned out, the decision was premature and based on flawed data.

It is obvious that proper execution of such a strategy crucially depends on exact and comprehensive surveillance. The protocols for surveillance and preventative drug dispensation had been laid down in detail by the WHO. Surveillance workers were to visit every home, every two weeks, to take blood samples and administer treatment to every person reporting a fever. In addition, random blood samples were to be taken from 10% of the population every year. Similarly, health practitioners were instructed to take diagnostic samples and administer antimalarial drugs to every fever patient.

Harrison [1] vividly describes the shortcomings and failures in the implementation of this system. He focuses on India and Sri Lanka, but there is no reason to assume that his observations do not apply elsewhere; in fact, the situation is even worse in many African countries (see below). Surveillance workers were underpaid and overworked. Remote and pathless rural areas of both countries were neglected (a situation that seems to prevail even today [20]), and surplus samples were collected in more easily accessible locations to cover up the neglect. Understaffed laboratories were swamped with large sample volumes; backlogs ensued, so that patients went undiagnosed and continued to serve as disease reservoirs.

Other major crises interfered with proper execution as well. Floods, droughts, famines, cholera, and India’s war with Pakistan: such urgent events diverted attention and resources from malaria control. Beginning in the 1970s, soaring oil prices drove up the cost of DDT production. At the same time, DDT resistance began to spread, necessitating the use of more expensive insecticides such as malathion, which is now itself afflicted by resistance (see Figure 5A). Resistance of Plasmodium to the major cheap antimalarial drugs spread as well. The window of opportunity for malaria eradication, which the WHO had correctly identified in the 1950s and had valiantly attempted to use, had closed.

4.2. Africa

If eradication failed in India and Sri Lanka, it was never even systematically attempted in tropical Africa. Figure 6 shows the very limited scale of control or eradication efforts in Africa as of 1960. This seems to have been the maximum extent in that era; most of those projects were discontinued soon afterwards as former colonies became independent.

Where control/eradication was attempted, it met with a multitude of obstacles. Insecticide spraying was less effective on the walls of mud huts than on other construction materials. Under these conditions, dieldrin and benzene hexachloride initially proved more effective than DDT, but the mosquitoes rapidly developed resistance to both. (DDT resistance was slower to emerge, but it is now common in many parts of Africa as well.) Some indigenous vector species bite outdoors, thus circumventing indoor spraying altogether.

As mentioned earlier, the high abundance of vector mosquitoes raised the bar for success. In one particular trial in West Africa, it was found that “despite a 90% reduction in the vectorial capacity, the prevalence of P. falciparum was reduced by only about 25% on the average” [21]. Similarly, a report prepared for the US government [22] found that “routine larviciding was carried forward, for example, in Kaduna, Nigeria, yet visiting malariologists …concluded that the control measures had a negligible impact on the mosquito problem.” Lack of trained personnel, administrative shortcomings, political turmoil, and warfare further undermined whatever partial successes were achieved. Finally, we must not forget that much of the recent past in Africa has been overshadowed by the HIV epidemic. As HIV, together with the accompanying disastrous resurgence of tuberculosis, came into focus, attention and resources were diverted from malaria control.
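A textbook model helps explain why a 90% cut in vectorial capacity can leave prevalence nearly unchanged. In a basic SIS (susceptible-infected-susceptible) model, the endemic equilibrium prevalence is 1 - 1/R0 when R0 > 1 and zero otherwise. The sketch below assumes, hypothetically, that R0 scales in proportion to vectorial capacity and compares a low-transmission setting with a high-transmission one. This is a swapped-in generic model, not the analysis behind [21], and it does not reproduce the 25% figure. It only shows the saturating shape of the relationship.

```python
def equilibrium_prevalence(r0: float) -> float:
    """Endemic equilibrium prevalence of a basic SIS model: 1 - 1/R0, or 0 if R0 <= 1."""
    return max(0.0, 1.0 - 1.0 / r0)


def prevalence_before_after(r0: float, cut: float) -> tuple[float, float]:
    """Prevalence before and after reducing R0 by the fraction `cut`,
    assuming R0 is proportional to vectorial capacity."""
    return equilibrium_prevalence(r0), equilibrium_prevalence(r0 * (1.0 - cut))


if __name__ == "__main__":
    for r0 in (5, 100):  # hypothetical low vs. high transmission settings
        before, after = prevalence_before_after(r0, cut=0.9)
        print(f"R0 = {r0}: prevalence {before:.0%} -> {after:.0%}")
```

In the low-transmission setting, the same 90% cut pushes R below one and the infection disappears. In the high-transmission setting, prevalence drops by less than a tenth, from about 99% to about 90%.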

As long as it remained effective, the drug chloroquine was the single most important means of relief. This compound was very cheap to manufacture, and through over-the-counter sales it reached millions who had no access to regular medical care. Because of its low price, chloroquine did not attract the interest of counterfeiters, who continue to sabotage the effectiveness of many other anti-infective drugs. Unfortunately, chloroquine resistance is now almost universal.

5. Conclusion

The WHO program to eradicate malaria succeeded in some countries such as Cuba and Taiwan; it failed in others such as India and Sri Lanka, and it was never even properly implemented in large parts of Africa. DDT was an important element of this program, but its significance has since faded due to widespread mosquito resistance. This resistance has most likely been promoted by its use in crop protection, just as resistance to antibiotics has proliferated due to their widespread use in animal husbandry [23]. The ban on the agricultural use of DDT, first promulgated in 1972, came too late to avoid most of this negative impact on malaria control.

Deplorable as the loss of DDT, chloroquine, and other formerly reliable weapons against malaria may be, we should note that success in our fight against epidemic infections hinges not so much on individual compounds as on our capacity to properly implement coherent strategies of disease control. For example, all affluent and well-run countries have managed to eliminate cholera, and to reduce tuberculosis from an endemic disease to a sporadic one. They achieved this through hygiene and surveillance, long before the advent of antibacterial chemotherapy. However, the same diseases have remained widespread in third world countries long after.

To eradicate malaria, we cannot rely on novel drugs and insecticides alone, important as these would be for providing transient relief and mitigation. Ultimately, success will depend on economic development and on peace, on lifting countries out of misery. Looking at the world maps of prosperity and health, it is abundantly clear that the two go hand in hand.

References

[1] Harrison, G.A. (1978) Mosquitoes, Malaria and Man: A History of the Hostilities Since 1880 (Dutton).

[2] Webb, J.L.A. (2014) The Long Struggle against Malaria in Tropical Africa (Cambridge University Press).

[3] Gething, P.W. et al. (2010) Climate change and the global malaria recession. Nature 465:342-345.

[4] Hay, S.I. et al. (2010) Estimating the global clinical burden of Plasmodium falciparum malaria in 2007. PLoS Med. 7:e1000290.

[5] Breman, J.G. et al. (2004) Conquering the intolerable burden of malaria: what’s new, what’s needed: a summary. Am. J. Trop. Med. Hyg. 71:1-15.

[6] Sherman, I.W. (2009) The Elusive Malaria Vaccine: Miracle or Mirage? (ASM Press).

[7] Anonymous (2006) The Malaria Atlas Project.

[8] Snow, R.W. et al. (2001) The past, present and future of childhood malaria mortality in Africa. Trends Parasitol. 17:593-597.

[9] Watson, M. (1915) Rural Sanitation in the Tropics (John Murray).

[10] Stapleton, D.H. (2004) Lessons of history? Anti-malaria strategies of the International Health Board and the Rockefeller Foundation from the 1920s to the era of DDT. Public Health Rep. 119:206-215.

[11] Davies, T.G.E. et al. (2007) DDT, pyrethrins, pyrethroids and insect sodium channels. IUBMB Life 59:151-162.

[12] Baird, J.K. (2000) Resurgent malaria at the millennium: control strategies in crisis. Drugs 59:719-743.

[13] Anonymous (2001) Stockholm Convention on Persistent Organic Pollutants.

[14] Eskenazi, B. et al. (2009) The Pine River statement: human health consequences of DDT use. Environ. Health Perspect. 117:1359-1367.

[15] Rogan, W.J. and Chen, A. (2005) Health risks and benefits of bis(4-chlorophenyl)-1,1,1-trichloroethane (DDT). Lancet 366:763-773.

[16] van Wendel de Joode, B. et al. (2001) Chronic nervous-system effects of long-term occupational exposure to DDT. Lancet 357:1014-1016.

[17] Dash, A.P. et al. (2008) Malaria in India: challenges and opportunities. J. Biosci. 33:583-592.

[18] Kumar, A. et al. (2007) Burden of malaria in India: retrospective and prospective view. Am. J. Trop. Med. Hyg. 77:69-78.

[19] Macdonald, G. (1965) Eradication of malaria. Public Health Rep. 80:870-879.

[20] Singh, N. et al. (2009) Fighting malaria in Madhya Pradesh (Central India): are we losing the battle? Malar. J. 8:93.

[21] Zahar, A.R. (1984) Vector control operations in the African context. Bull. World Health Organ. 62 Suppl.:89-100.

[22] Rozeboom, L.E. et al. (1975) USAID, Office of Health: Report of Consultants: African Malaria.

[23] Shea, K.M. (2003) Antibiotic resistance: what is the impact of agricultural uses of antibiotics on children’s health? Pediatrics 112:253-258.